Advanced Evaluation Methodologies

Interpreting Classification Accuracy

While accuracy serves as the most intuitive performance measure, its usefulness diminishes with imbalanced datasets. A model achieving 95% accuracy might be completely ineffective if the minority class represents critical cases. This paradox necessitates deeper analysis beyond surface-level metrics.

Accuracy measurements also fail to distinguish between different error types. Understanding where and how models fail proves more valuable than knowing how often they succeed.

Precision-Recall Dynamics

These complementary metrics address accuracy's limitations by focusing on specific prediction aspects:

- Precision: Minimizing false alarms

- Recall: Ensuring comprehensive detection

The F1-score harmonizes these competing priorities, though the optimal balance varies by application. Medical screening might tolerate more false positives to catch all actual cases, while legal document review would demand near-perfect precision.

Confusion Matrix Analysis

This tabular representation breaks down predictions into four categories:

- True Positives (correct detections)

- False Positives (incorrect alarms)

- True Negatives (correct rejections)

- False Negatives (missed detections)

Examining these components individually reveals patterns that aggregate metrics obscure. For instance, consistent errors on a particular class might indicate inadequate training data representation.

ROC/AUC Interpretation

Receiver Operating Characteristic analysis evaluates model performance across all possible decision thresholds. The Area Under Curve metric quantifies overall discriminative power:

- 0.9-1.0: Excellent separation

- 0.8-0.9: Good discrimination

- 0.7-0.8: Moderate usefulness

- 0.5-0.7: Limited value

This approach proves particularly valuable when error costs vary significantly between false positives and negatives.

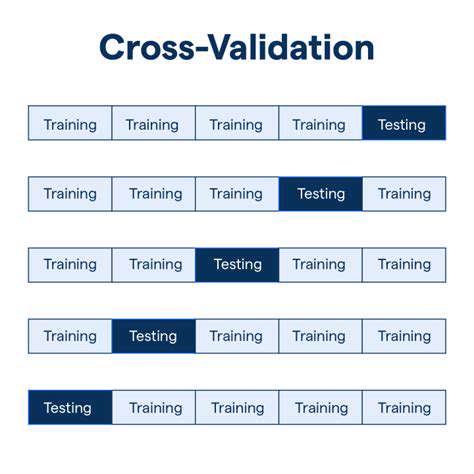

Cross-Validation Procedures

K-fold cross-validation represents the gold standard for reliable performance estimation:

- Randomly partition data into K equal subsets

- Iteratively use K-1 folds for training, 1 for testing

- Average results across all iterations

This method minimizes the impact of data partitioning randomness while efficiently using available samples. Cross-validated metrics provide the most trustworthy performance estimates.

Practical Implementation Considerations

While quantitative metrics guide development, practical deployment requires additional considerations:

| Factor | Impact |

|---|---|

| Computational Cost | Affects real-world feasibility |

| Interpretability | Determines stakeholder trust |

| Maintainability | Influences long-term viability |

The best model often represents the optimal balance between statistical performance and operational practicality.