Evaluating and Optimizing Transfer Learning Models for Audio

Understanding Transfer Learning in Audio

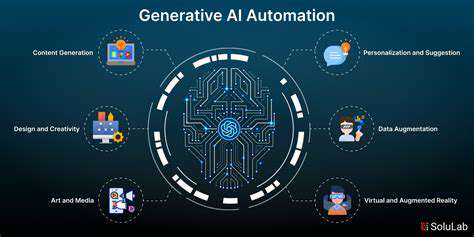

Transfer learning reuses knowledge gained on one task to improve performance on a related but different task. In audio, this means a model pre-trained on a large corpus of audio signals can be adapted to a new, smaller, or more specific classification task, which reduces the amount of labeled training data required. This approach typically shortens development time and often yields higher accuracy than training a model from scratch on a limited dataset.

Pre-trained Models for Audio Tasks

Several pre-trained models excel in various audio applications. For instance, models trained on large-scale speech datasets can be fine-tuned for tasks like speaker identification or speech recognition. Similarly, models trained on music datasets can be adapted to tasks like genre classification or instrument recognition. Understanding the strengths and weaknesses of these pre-trained models is crucial for selecting the most appropriate one for a given audio task.

Architecture matters as well. Comparing options such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) helps identify the model best suited to the audio input and the intended output. Experimentation is usually needed to find the best model for a specific audio classification problem.

Evaluating Transfer Learning Model Performance

A transfer learning model should be evaluated on data it has not seen during training. Accuracy, precision, recall, and F1-score are commonly computed on a validation or test set, and they quantify how well the model classifies each audio class. Per-class values are worth inspecting because overall accuracy can hide poor performance on rare classes. Comparing the results against a baseline model trained from scratch on the same data shows how much transfer learning actually helps.
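As a minimal sketch of how the metrics above are computed, the following example derives accuracy and per-class precision, recall, and F1 from two label lists. The function name `per_class_metrics` and the class names (`speech`, `music`, `noise`) are illustrative assumptions, not part of any particular library.

```python
from collections import Counter

def per_class_metrics(y_true, y_pred):
    """Return overall accuracy and per-class precision, recall, and F1."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1}

    accuracy = sum(tp.values()) / len(y_true)
    return accuracy, report

# Hypothetical labels for six audio clips (illustrative only).
y_true = ["speech", "speech", "music", "music", "noise", "noise"]
y_pred = ["speech", "music", "music", "music", "noise", "speech"]

accuracy, report = per_class_metrics(y_true, y_pred)
for label, scores in report.items():
    print(label, {name: round(value, 3) for name, value in scores.items()})
print("accuracy", round(accuracy, 3))
```

Running the same function on the predictions of a from-scratch baseline gives a like-for-like comparison on identical data.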

Optimizing Transfer Learning for Specific Audio Tasks

Fine-tuning the pre-trained model's parameters adapts it to a particular audio task. Adjusting the learning rate, trying different optimization algorithms, and adding or removing layers can all significantly affect the final results, so these choices deserve careful tuning.
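One common starting point, shown here only as a sketch, is to freeze the pre-trained layers and train a newly added classification head on top of their outputs. The example below is a toy under stated assumptions: `BACKBONE` is a random fixed matrix standing in for a real pre-trained audio encoder, the data is synthetic, and the learning rate and epoch count are arbitrary illustrative values.

```python
import math
import random

random.seed(0)

N_INPUT, N_EMBED = 8, 4

# Hypothetical stand-in for a pre-trained audio encoder: fixed weights + ReLU.
# In practice these weights would be loaded from a real checkpoint.
BACKBONE = [[random.uniform(-1, 1) for _ in range(N_INPUT)] for _ in range(N_EMBED)]

def embed(x):
    """Frozen forward pass: BACKBONE is read but never updated."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in BACKBONE]

def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, lr=0.1, epochs=200):
    """Train a logistic-regression head on frozen embeddings with gradient descent."""
    feats = [(embed(x), y) for x, y in data]  # backbone is frozen, so embed once
    head, bias, losses = [0.0] * N_EMBED, 0.0, []
    n = len(feats)
    for _ in range(epochs):
        grad_w, grad_b, loss = [0.0] * N_EMBED, 0.0, 0.0
        for f, y in feats:
            p = sigmoid(sum(w * v for w, v in zip(head, f)) + bias)
            loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
            for j in range(N_EMBED):
                grad_w[j] += (p - y) * f[j]
            grad_b += p - y
        # Only the head and bias are updated; the backbone stays untouched.
        head = [w - lr * g / n for w, g in zip(head, grad_w)]
        bias -= lr * grad_b / n
        losses.append(loss / n)
    return head, bias, losses

# Toy data: random 8-dimensional "clips" with a synthetic binary label.
data = []
for _ in range(64):
    x = [random.uniform(-1, 1) for _ in range(N_INPUT)]
    data.append((x, 1 if sum(x[:4]) > 0 else 0))

backbone_before = [row[:] for row in BACKBONE]
head, bias, losses = train_head(data)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Because the backbone never changes, its embeddings can be computed once and cached, which keeps each training step cheap. Unfreezing some backbone layers with a smaller learning rate is a possible next step when the frozen features prove insufficient.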

Addressing Data Imbalance and Bias in Audio Data

Audio datasets often have imbalanced class distributions, where some classes are significantly underrepresented compared to others. A model trained on such data tends to be biased toward the common classes and to perform poorly on the rare ones. Techniques such as data augmentation, class weighting, and specialized loss functions can make transfer learning models for audio more robust and fair. Choosing among them requires a clear picture of the data distribution.
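Class weighting can be sketched as follows. The example uses the common inverse-frequency heuristic, `n_samples / (n_classes * class_count)`, and applies the resulting weights in a weighted cross-entropy. The function names, class names, and counts are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

def weighted_cross_entropy(probs, targets, weights):
    """Weighted mean negative log-likelihood.

    probs: list of dicts mapping class name -> predicted probability.
    targets: list of true class names, one per example.
    """
    total = sum(weights[t] * -math.log(p[t]) for p, t in zip(probs, targets))
    return total / sum(weights[t] for t in targets)

# Hypothetical imbalanced dataset: 80 / 15 / 5 clips per class.
labels = ["speech"] * 80 + ["siren"] * 15 + ["glass_break"] * 5
weights = inverse_frequency_weights(labels)
for cls, weight in weights.items():
    print(f"{cls}: {weight:.3f}")

# A mistake on a rare class now costs more than one on a common class.
probs = [{"speech": 0.9, "siren": 0.05, "glass_break": 0.05},
         {"speech": 0.7, "siren": 0.2, "glass_break": 0.1}]
targets = ["speech", "glass_break"]
print(f"weighted loss: {weighted_cross_entropy(probs, targets, weights):.3f}")
```

With these counts the rarest class receives a weight sixteen times that of the most common one, so the loss is dominated by errors on underrepresented classes rather than by sheer example count.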

Deployment and Integration Considerations for Audio Models

Once a transfer learning model is optimized, its deployment and integration into existing systems must be planned. Computational resources, latency requirements, and real-time processing constraints all need to be addressed, and the model must remain compatible with existing infrastructure and user interfaces. In production, its resource usage and operational efficiency deserve the same attention as its accuracy.
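A latency requirement can be checked with a small benchmark. The sketch below times repeated inference calls and reports the real-time factor (processing time divided by audio duration), where a value below 1 means the model keeps up with incoming audio. `dummy_model`, the 16 kHz sample rate, and the run counts are assumptions for illustration; a real inference function would be substituted.

```python
import statistics
import time

def real_time_factor(process_fn, clip, clip_seconds, runs=20, warmup=3):
    """Time process_fn on one clip and summarize its latency."""
    for _ in range(warmup):  # warm-up calls are not timed
        process_fn(clip)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        process_fn(clip)
        timings.append(time.perf_counter() - start)
    median = statistics.median(timings)
    return {
        "median_latency_s": median,
        "max_latency_s": max(timings),
        "rtf": median / clip_seconds,  # below 1.0 means faster than real time
    }

def dummy_model(clip):
    """Hypothetical stand-in for model inference: mean signal energy."""
    return sum(sample * sample for sample in clip) / len(clip)

clip = [0.0] * 16000  # one second of audio at an assumed 16 kHz sample rate
stats = real_time_factor(dummy_model, clip, clip_seconds=1.0)
print(stats)
```

Measuring on the target hardware matters, since the same model can meet a real-time budget on a server and miss it on an embedded device.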