Edge AI Deployment Strategies

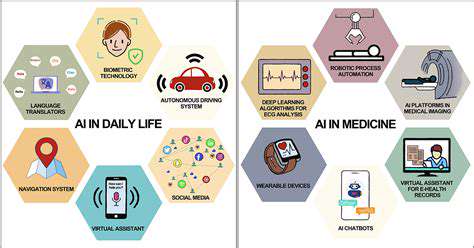

Implementing Edge AI starts with choosing a deployment strategy, which means evaluating data volume, latency requirements, and available computing resources. The choice of edge device matters just as much, because it bounds the performance and efficiency the system can reach. Running inference directly on the edge avoids the network round trip to the cloud, which often shortens response times for real-time applications. It also reduces reliance on cloud infrastructure, which helps where internet connectivity is limited or unreliable.

Common deployment strategies include deploying pre-trained models, training models on the edge, or combining the two in a hybrid approach. Each has its own advantages and disadvantages, and the right choice depends on the specific use case. The main trade-offs to weigh during deployment are model complexity, data privacy, and computational resources.

Data Ingestion and Preprocessing

Efficient data ingestion is critical for successful Edge AI implementation. A robust pipeline for collecting data from various sources and preparing it for model input is vital. Thorough data preprocessing techniques are necessary to ensure data quality and accuracy. This includes steps like cleaning, transforming, and formatting data to meet the specific requirements of the deployed AI model.

Data inconsistencies, outliers, and missing values all need to be handled explicitly. Doing so means the model receives reliable data, both for training and at prediction time, which improves the performance and reliability of the deployed AI model.
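As a minimal sketch of the cleaning and transforming steps described above, the following assumes a stream of numeric sensor readings in which a missing value arrives as `None`. The function names, the median fill, and the clamping bounds are illustrative assumptions, not a prescribed pipeline.

```python
from statistics import median


def clean_readings(readings, lower, upper):
    """Fill missing values with the median, then clamp outliers to [lower, upper]."""
    present = [r for r in readings if r is not None]
    if not present:
        raise ValueError("no valid readings to impute from")
    fill = median(present)  # the median is less sensitive to outliers than the mean
    cleaned = []
    for r in readings:
        value = fill if r is None else r
        cleaned.append(min(max(value, lower), upper))
    return cleaned


def min_max_scale(values, lower, upper):
    """Rescale values from [lower, upper] to [0.0, 1.0] for model input."""
    span = upper - lower
    if span <= 0:
        raise ValueError("upper must be greater than lower")
    return [(v - lower) / span for v in values]


# Hypothetical temperature readings: one missing value and one spike.
raw = [20.0, None, 22.0, 500.0]
cleaned = clean_readings(raw, lower=0.0, upper=50.0)
scaled = min_max_scale(cleaned, lower=0.0, upper=50.0)
```

Whatever bounds and fill rule are chosen, the same ones should be applied on the device as were used when the model was trained, so that inputs stay in the range the model expects.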

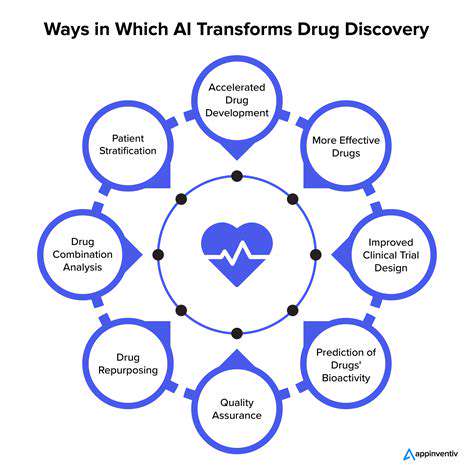

Model Selection and Optimization

Selecting the appropriate AI model is a key aspect of Edge AI implementation. Consider factors like model accuracy, complexity, and computational requirements. Choosing a model that aligns with the specific needs of the application is crucial for achieving optimal results. Lightweight models are often preferred for edge deployments due to the limitations of computational resources on edge devices.

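Optimizing the model for edge deployment is also important. Techniques like quantization and pruning can significantly reduce model size and improve inference speed, often with only a small loss in accuracy. That loss is not guaranteed to be negligible, so the optimized model should be re-evaluated against the original before it is deployed. This optimization process is critical to realizing the performance advantages of Edge AI.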
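To make the two techniques concrete, here is a small pure-Python sketch of symmetric 8-bit quantization and magnitude-based pruning on a flat list of weights. It is an illustration of the ideas only; real deployments would normally rely on the tooling of their ML framework, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.01, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
# Rounding error per weight is at most half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
pruned = prune_by_magnitude(weights, sparsity=0.5)
```

Each weight is stored in one byte instead of four, at the cost of a rounding error bounded by `scale / 2`. Pruning removes the weights that contribute least by magnitude, which is a heuristic rather than a guarantee that accuracy is preserved.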

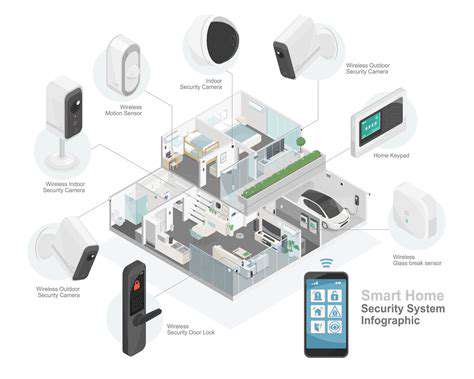

Security Considerations

Edge AI systems often handle sensitive data, making security a top priority. Robust security measures are essential to protect against unauthorized access, data breaches, and malicious attacks. Implementing strong authentication and authorization mechanisms is critical to safeguarding data integrity and confidentiality. Data encryption throughout the data lifecycle, from collection to processing, is essential for maintaining security.
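One piece of this, verifying that a message really came from a trusted device and was not altered in transit, can be sketched with Python's standard `hmac` module. The example assumes a secret key already provisioned on both the device and the receiver; key distribution and storage are out of scope here. It provides integrity and authenticity only, not confidentiality. Encrypting the payload would require a vetted cryptography library on top of this.

```python
import hashlib
import hmac
import json


def sign_payload(payload: dict, key: bytes) -> dict:
    """Serialize a payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_payload(message: dict, key: bytes) -> dict:
    """Return the decoded payload, or raise ValueError if the tag does not match."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("payload failed integrity check")
    return json.loads(message["body"])


# Hypothetical key and payload, for illustration only.
shared_key = b"replace-with-a-provisioned-secret"
message = sign_payload({"device": "cam-01", "score": 0.93}, shared_key)
payload = verify_payload(message, shared_key)
```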

Regular security audits and vulnerability assessments are crucial. Staying updated on the latest security threats and implementing appropriate countermeasures is vital to maintaining a secure Edge AI environment.

Real-time Performance Monitoring

Real-time performance monitoring and analysis are crucial to ensure the smooth and efficient operation of Edge AI systems. Monitoring key metrics like latency, accuracy, and resource utilization is vital for identifying and addressing performance bottlenecks. Implementing monitoring tools and dashboards allows for proactive identification of potential issues and timely interventions.

Continuously tracking the models deployed on the edge also reveals gradual degradation in performance or accuracy, for example when incoming data drifts away from the data the model was trained on. Catching this early allows proactive adjustments, such as retraining or rolling back a model, and regular analysis of the metrics shows which areas need improvement.
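A rolling-window monitor is one simple way to track these metrics on the device itself. The sketch below is an assumption-laden illustration: the class name, window size, and thresholds are hypothetical, and the accuracy figure only makes sense when ground-truth labels are available for at least some predictions.

```python
import math
from collections import deque


class RollingMonitor:
    """Tracks recent latency and accuracy over a fixed-size window."""

    def __init__(self, window=100, latency_budget_ms=50.0, min_accuracy=0.9):
        self.latencies = deque(maxlen=window)
        self.outcomes = deque(maxlen=window)
        self.latency_budget_ms = latency_budget_ms
        self.min_accuracy = min_accuracy

    def record(self, latency_ms, was_correct=None):
        """Record one inference; was_correct is optional because labels may be unavailable."""
        self.latencies.append(latency_ms)
        if was_correct is not None:
            self.outcomes.append(1 if was_correct else 0)

    def p95_latency(self):
        """95th-percentile latency using the nearest-rank method."""
        if not self.latencies:
            return None
        ordered = sorted(self.latencies)
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def alerts(self):
        """Return the names of metrics currently outside their thresholds."""
        found = []
        p95 = self.p95_latency()
        if p95 is not None and p95 > self.latency_budget_ms:
            found.append("latency")
        acc = self.accuracy()
        if acc is not None and acc < self.min_accuracy:
            found.append("accuracy")
        return found
```

Because the window is bounded, memory use stays constant, which suits constrained devices. The alert list could be forwarded to whatever dashboard or monitoring system is already in place.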

Deployment on Various Edge Devices

Edge AI deployments need to be adaptable to various edge devices, ranging from embedded systems to specialized hardware. Different edge devices offer varying computational capabilities, and models need to be tailored accordingly. Assessing the capabilities of each device is crucial for choosing the right model and optimizing its performance.

Solutions also need to scale across different edge devices, so that AI models can be integrated and managed consistently on a variety of hardware platforms. In practice this often means maintaining several variants of a model and choosing between them per device. This adaptability is key to successful Edge AI implementations.
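Tailoring a model to a device can be as simple as picking the most accurate variant that fits within the device's resources. The following sketch assumes memory is the only constraint and that each variant's size and accuracy are known in advance. The names, numbers, and the `headroom` factor are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVariant:
    name: str
    size_mb: float
    accuracy: float


@dataclass(frozen=True)
class Device:
    name: str
    free_memory_mb: float


def pick_variant(device, variants, headroom=0.5):
    """Pick the most accurate variant that fits in a fraction of free memory.

    `headroom` leaves room for activations and the rest of the application.
    Returns None if no variant fits.
    """
    budget = device.free_memory_mb * headroom
    fitting = [v for v in variants if v.size_mb <= budget]
    if not fitting:
        return None
    return max(fitting, key=lambda v: v.accuracy)


# Hypothetical variants of the same model.
variants = [
    ModelVariant("tiny", size_mb=4.0, accuracy=0.82),
    ModelVariant("small", size_mb=20.0, accuracy=0.88),
    ModelVariant("large", size_mb=120.0, accuracy=0.93),
]
choice = pick_variant(Device("gateway", free_memory_mb=64.0), variants)
```

A real selection step would likely also consider compute throughput, power draw, and supported numeric formats, but the structure stays the same: filter by what the device can run, then rank by what the application needs.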

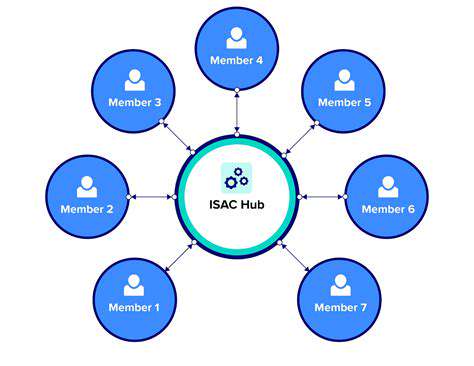

Integration with Existing Systems

Integrating Edge AI solutions with existing systems is a critical step, because the value of the deployment depends on how well it fits the infrastructure already in place. Careful planning and design are needed to avoid disrupting existing processes. Connecting to existing data pipelines and monitoring systems, rather than building parallel ones, eases the transition into the Edge AI ecosystem.

Careful consideration must be given to the communication protocols and data formats used by the existing systems. This ensures data consistency and efficient flow throughout the entire system.
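One way to keep data consistent between an edge device and an existing system is to agree on a versioned message format and validate it at the boundary. The sketch below uses JSON with a hypothetical set of fields. The field names and the `schema_version` convention are assumptions for illustration, not a standard.

```python
import json

SCHEMA_VERSION = 1
# Hypothetical fields for a single inference result.
REQUIRED_FIELDS = {
    "device_id": str,
    "timestamp": float,
    "label": str,
    "confidence": float,
}


def encode_result(device_id, timestamp, label, confidence):
    """Serialize an inference result into the agreed JSON format."""
    return json.dumps({
        "schema_version": SCHEMA_VERSION,
        "device_id": device_id,
        "timestamp": float(timestamp),
        "label": label,
        "confidence": float(confidence),
    })


def decode_result(raw):
    """Parse and validate a message; raise ValueError on any mismatch."""
    message = json.loads(raw)
    if message.get("schema_version") != SCHEMA_VERSION:
        raise ValueError("unsupported schema version")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(message.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field}")
    return message


raw = encode_result("cam-01", 1700000000, "person", 0.87)
result = decode_result(raw)
```

Rejecting unknown versions explicitly makes format changes visible at the integration point instead of letting them surface later as silent data errors.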