Predictive Modeling for Future Journey Optimization

Predictive Modeling Techniques

The predictive modeling toolkit ranges from straightforward statistical methods to sophisticated machine learning approaches. Choosing the right technique is not about chasing complexity; it is about matching the tool to the specific business question and the characteristics of the data. Linear regression offers transparency for simple relationships, while neural networks can uncover patterns in large, complex datasets that human analysts might miss.

One often overlooked aspect is model interpretability. The most accurate model is not always the best choice if stakeholders cannot understand how it reaches its conclusions. Finding the sweet spot between predictive power and explainability remains one of data science's greatest challenges, and one of its greatest opportunities.
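To make the transparency point concrete, here is a minimal sketch of a one-variable least-squares fit in plain Python. The data and the variable names (`sessions`, `spend`) are hypothetical, chosen only for illustration. The fitted slope can be read directly as "expected change in spend per additional session", which is the kind of explanation a stakeholder can follow.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Hypothetical data: weekly sessions vs. monthly spend
sessions = [1, 2, 3, 4, 5]
spend = [12.0, 14.0, 16.0, 18.0, 20.0]

intercept, slope = fit_line(sessions, spend)
print(f"spend = {intercept:.1f} + {slope:.1f} * sessions")
```

A more flexible model might fit messier data better, but it would not offer a single coefficient that can be explained this plainly.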

Data Preparation and Feature Engineering

Before any modeling begins, data scientists spend considerable time preparing their raw materials. Missing values get imputed, outliers are addressed, and variables are transformed to comparable scales. This unglamorous work often makes the difference between a mediocre model and a transformative one. Just as a chef prepares ingredients before cooking, a data scientist prepares data before modeling, and that preparation sets the stage for success.
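The three preparation steps named above can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not a recommended pipeline: the sample column, the choice of median imputation, and the clipping bounds of 0 and 100 are all hypothetical, and in practice the bounds would come from domain knowledge or the data's own distribution.

```python
from statistics import mean, median, pstdev

def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def clip_outliers(values, lower, upper):
    """Cap every value to the range [lower, upper]."""
    return [min(max(v, lower), upper) for v in values]

def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

# Hypothetical column: order values with one gap and one extreme entry
raw = [20.0, None, 30.0, 40.0, 1000.0]

filled = impute_median(raw)                  # the gap becomes 35.0
capped = clip_outliers(filled, 0.0, 100.0)   # 1000.0 is capped at 100.0
scaled = standardize(capped)                 # comparable scale for modeling
```

The order matters here: capping before scaling keeps a single extreme value from dominating the mean and standard deviation used for standardization.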

Feature engineering represents the creative side of data science. It's here that domain expertise meets technical skill to create variables that capture meaningful patterns. The most impactful models often result from insightful feature engineering rather than algorithm selection alone. Sometimes, combining two existing variables in a novel way or creating a time-based metric reveals relationships that would otherwise remain hidden.
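As a small illustration of both ideas, the sketch below derives one feature by combining two existing variables and one time-based metric. The record layout, the field names, and the reference date are hypothetical, chosen only to show the pattern.

```python
from datetime import date

# Hypothetical customer records; field names are illustrative only.
customers = [
    {"id": "a", "total_spend": 300.0, "orders": 6, "last_order": date(2024, 5, 1)},
    {"id": "b", "total_spend": 300.0, "orders": 1, "last_order": date(2024, 1, 15)},
]

def add_features(record, as_of):
    """Return a copy of the record with two derived features.

    Assumes record["orders"] is greater than zero.
    """
    enriched = dict(record)
    # Combining two existing variables: average order value
    enriched["avg_order_value"] = record["total_spend"] / record["orders"]
    # Time-based metric: days since the last order, relative to as_of
    enriched["days_since_last_order"] = (as_of - record["last_order"]).days
    return enriched

features = [add_features(c, as_of=date(2024, 6, 1)) for c in customers]
```

Both customers have the same total spend, yet the derived features separate a frequent, recent buyer from a one-time buyer who has been inactive for months. Neither raw column shows that distinction on its own.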

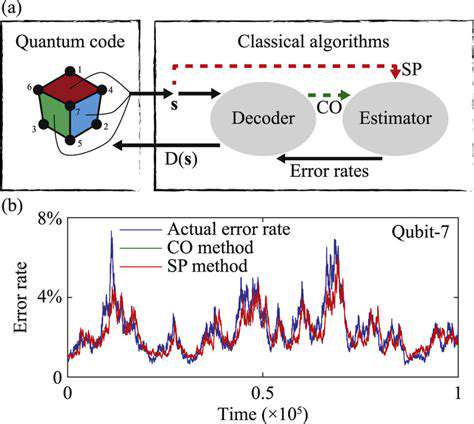

Model Evaluation and Validation

Rigorous testing separates reliable models from statistical flukes. The gold standard involves reserving a portion of the data specifically for testing: data the model never saw during training. Performance metrics tell part of the story, but understanding where and why a model fails is equally important. For example, high overall accuracy might mask poor performance on critical customer segments.
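The sketch below illustrates both points: holding out test data, and breaking accuracy down by segment. The segment names, the row layout, and the prediction results are hypothetical, constructed so that the overall figure looks healthy while one segment performs poorly.

```python
import random
from collections import defaultdict

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def accuracy_by_segment(rows):
    """Return overall accuracy and accuracy within each segment."""
    hits, totals = defaultdict(int), defaultdict(int)
    for row in rows:
        totals[row["segment"]] += 1
        hits[row["segment"]] += int(row["predicted"] == row["actual"])
    overall = sum(hits.values()) / sum(totals.values())
    per_segment = {seg: hits[seg] / totals[seg] for seg in totals}
    return overall, per_segment

# Splitting: the test rows are kept away from training entirely.
train_rows, test_rows = train_test_split(list(range(100)))

# Hypothetical test-set results: 90 standard customers, all predicted
# correctly, and 10 premium customers, only 4 predicted correctly.
results = (
    [{"segment": "standard", "predicted": 1, "actual": 1}] * 90
    + [{"segment": "premium", "predicted": 1, "actual": 1}] * 4
    + [{"segment": "premium", "predicted": 0, "actual": 1}] * 6
)
overall, per_segment = accuracy_by_segment(results)
print(f"overall: {overall:.2f}, by segment: {per_segment}")
```

In this constructed case the overall accuracy is 94 percent, while accuracy on the premium segment is only 40 percent. A single headline metric would hide exactly that kind of gap.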

Techniques like k-fold cross-validation provide more robust assessments. The data is split into k subsets (folds), and the model is trained k times, each time tested on a different fold and trained on the remaining ones. This thorough approach guards against the all-too-common pitfall of models that work beautifully in development but fail in production. Ultimately, proper validation builds confidence that predictions will hold up when real business decisions are at stake.
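A minimal sketch of k-fold cross-validation in plain Python follows. To keep it short, the "model" is a deliberately trivial baseline that predicts the mean of the training targets; the target values are hypothetical. The folds are contiguous and unshuffled, which is an assumption of this sketch: in practice rows are usually shuffled first unless the data is time-ordered.

```python
from statistics import mean

def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs; each index is tested exactly once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, test_idx
        start += size

def cross_validate(ys, k):
    """Score a mean-only baseline by mean absolute error on each fold."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(ys), k):
        # "Fit" using the training folds only
        prediction = mean(ys[i] for i in train_idx)
        # Evaluate on the held-out fold
        errors = [abs(ys[i] - prediction) for i in test_idx]
        scores.append(mean(errors))
    return scores

# Hypothetical target values
ys = [10.0, 12.0, 11.0, 13.0, 9.0, 14.0]
fold_scores = cross_validate(ys, k=3)
print(fold_scores, mean(fold_scores))
```

The spread of the per-fold scores is as informative as their average: a model whose error varies widely from fold to fold is more likely to behave unpredictably on new data.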