Ethical Considerations and Regulatory Implications

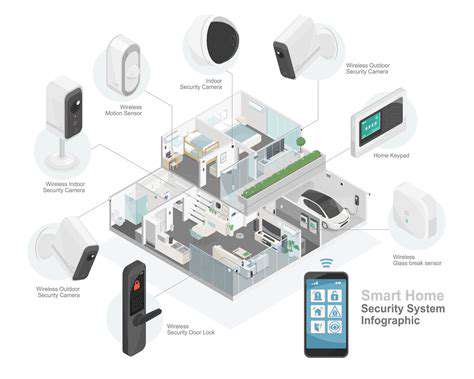

Data Privacy and Security

Deep learning models for medical image analysis often require large datasets of sensitive patient information. Handling this data demands stringent privacy protocols and compliance with regulations such as HIPAA (Health Insurance Portability and Accountability Act) in the US and GDPR (General Data Protection Regulation) in the European Union. Patient confidentiality must be protected throughout the data lifecycle, from acquisition through model training and deployment. Encryption in transit and at rest, access controls, and secure storage help prevent unauthorized access and breaches. Furthermore, data pipelines should de-identify patient data wherever possible while retaining the information needed for accurate analysis.
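As a minimal sketch of what removing identifiers before training might look like, the following drops direct identifiers from an image's metadata record and replaces the patient ID with a keyed hash. The field names and the `deidentify_record` helper are hypothetical, chosen only for illustration; a real pipeline would follow its own schema and the de-identification standard that applies to it. Note that this is pseudonymization rather than full anonymization, and it does not touch identifiers burned into the pixel data.

```python
import hashlib
import hmac

# Hypothetical field names (assumption); adapt to the actual metadata schema.
DIRECT_IDENTIFIERS = {"patient_name", "birth_date", "address", "phone"}


def pseudonymize_id(patient_id: str, secret_key: bytes) -> str:
    """Replace a patient ID with a keyed hash (HMAC-SHA256).

    The same ID and key always give the same pseudonym, so scans from one
    patient can still be linked without exposing the original ID.
    """
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record without direct identifiers."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymize_id(str(record["patient_id"]), secret_key)
    return cleaned


if __name__ == "__main__":
    record = {
        "patient_id": "12345",
        "patient_name": "Jane Doe",
        "birth_date": "1970-01-01",
        "modality": "CT",
    }
    print(deidentify_record(record, secret_key=b"keep-this-key-in-a-secrets-manager"))
```

The key must be stored separately from the data: anyone holding both the key and a list of candidate IDs could recompute the pseudonyms.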

Synthetic data and federated learning can help mitigate data privacy concerns. Synthetic data generation creates realistic but artificial datasets for model training, reducing reliance on real patient data. Federated learning instead trains a shared model across decentralized data sources: each site trains locally and shares only model updates, so sensitive patient information does not have to be transferred to a central location. These approaches are becoming increasingly important for addressing the ethical and regulatory challenges associated with deep learning in medical imaging.
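The federated idea can be illustrated with a toy version of federated averaging. In this sketch, which assumes a one-dimensional linear model and two hypothetical hospital sites, each site runs a few gradient steps on its own data and only the resulting weights are sent to the server, which averages them in proportion to each site's sample count. All function names are illustrative, and real systems add secure aggregation, communication handling, and much larger models.

```python
from typing import List, Tuple

Weights = List[float]                # [slope, intercept] of a toy linear model
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave their site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 5) -> Weights:
    """Run a few gradient-descent steps on one site's local data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: List[Weights],
                      client_sizes: List[int]) -> Weights:
    """Average client weights, weighted by each client's sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(wts[i] * size for wts, size in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def federated_round(global_weights: Weights, clients: List[Dataset]) -> Weights:
    """One round: every site trains locally, the server averages the results."""
    updates = [local_update(list(global_weights), data) for data in clients]
    sizes = [len(data) for data in clients]
    return federated_average(updates, sizes)


if __name__ == "__main__":
    # Two hypothetical sites whose data follow y = 2x + 1.
    site_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
    site_b = [(3.0, 7.0), (4.0, 9.0)]
    weights = [0.0, 0.0]
    for _ in range(100):
        weights = federated_round(weights, [site_a, site_b])
    print(weights)  # approaches [2.0, 1.0]
```

Only the weight lists cross site boundaries here; the `(x, y)` pairs stay where they were collected. Shared weights can still leak information about the training data, which is why federated learning is often combined with further safeguards.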

Bias and Fairness in Model Training

Deep learning models are only as good as the data they are trained on. If the training dataset reflects existing societal biases, the resulting model may perpetuate and even amplify these biases in its diagnostic or treatment recommendations. For example, if a dataset predominantly features images from patients of a particular ethnicity or socioeconomic background, the model might perform less accurately on images from other groups, leading to potentially harmful disparities in care.

Minimizing bias starts with careful attention to the diversity and representativeness of the training data. Development teams should also apply methods for detecting and mitigating bias in both the data and the model outputs. Regular audits of the model's performance across different demographic groups are crucial to ensure fairness, so that advanced medical imaging technologies benefit all patient groups equitably.
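A per-group audit can be sketched in a few lines. The example below computes sensitivity (the true positive rate) separately for each demographic group and reports the largest gap between groups. The choice of sensitivity as the metric, the group labels, and the function names are assumptions made for illustration; a real audit would likely track several metrics and account for small sample sizes.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def sensitivity_by_group(y_true: Sequence[int], y_pred: Sequence[int],
                         groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Compute the true positive rate for each group.

    Labels are 1 for disease present and 0 for absent. Groups with no
    positive cases are omitted because sensitivity is undefined for them.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            if pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    all_groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in all_groups}


def max_gap(rates: Dict[Hashable, float]) -> float:
    """Largest difference in the metric between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    y_true = [1, 1, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 0, 1]
    groups = ["A", "A", "B", "B", "A", "B"]
    rates = sensitivity_by_group(y_true, y_pred, groups)
    print(rates, max_gap(rates))
```

In this toy data the model finds every positive case in group A but only half of those in group B, the kind of disparity an audit is meant to surface.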

Regulatory Approvals and Standards

The deployment of deep learning models in medical image analysis requires careful consideration of regulatory approvals and standards. Different jurisdictions have varying regulations regarding the use of medical devices and software, and it is essential to adhere to these standards. This often involves rigorous testing, validation, and certification processes to ensure the accuracy and safety of the models. The regulatory landscape is constantly evolving, and healthcare organizations must stay informed about the latest guidelines and requirements.

Clear documentation of the model's development process, including data sources, training methods, and validation results, is essential for regulatory compliance. Transparency in the model's operation and decision-making processes is crucial for building trust and ensuring accountability. Furthermore, robust mechanisms for monitoring and evaluating the model's performance in real-world clinical settings are needed to identify and address potential issues after deployment. This ongoing evaluation is essential for maintaining the safety and efficacy of deep learning models in medical imaging applications.
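One simple form of post-deployment monitoring is to compare the model's predictions with clinician-confirmed labels over a rolling window and flag the model for review when agreement drops. The sketch below assumes such confirmed labels become available; the `PerformanceMonitor` class, the window size, and the threshold are hypothetical values chosen for illustration, not regulatory requirements.

```python
from collections import deque
from typing import Optional


class PerformanceMonitor:
    """Track rolling agreement between predictions and confirmed labels."""

    def __init__(self, window_size: int = 100, min_accuracy: float = 0.9):
        # Only the most recent `window_size` outcomes are kept.
        self.outcomes = deque(maxlen=window_size)
        self.min_accuracy = min_accuracy

    def record(self, prediction, confirmed_label) -> None:
        """Store whether the prediction matched the confirmed label."""
        self.outcomes.append(prediction == confirmed_label)

    def accuracy(self) -> Optional[float]:
        """Rolling accuracy, or None if nothing has been recorded yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True once the window is full and accuracy is below the threshold."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


if __name__ == "__main__":
    monitor = PerformanceMonitor(window_size=4, min_accuracy=0.75)
    for pred, truth in [(1, 1), (0, 0), (1, 1), (0, 0), (1, 0), (0, 1)]:
        monitor.record(pred, truth)
    print(monitor.accuracy(), monitor.needs_review())
```

Waiting for a full window before raising a flag avoids alerts based on a handful of cases; the trade-off is slower detection when case volume is low.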

Liability and Accountability

Determining liability when a deep learning model contributes to an incorrect diagnosis or prediction is a complex issue. A model cannot itself bear legal responsibility, so the question is how responsibility is divided among the developers who built it, the healthcare providers who relied on its output, and the institutions that deployed it. Clear guidelines and regulations are needed to settle accountability for errors made with these systems. Establishing mechanisms for reporting and investigating errors, along with processes for corrective action, is essential.

Resolving these liability questions requires collaboration among stakeholders, including developers, clinicians, and regulatory bodies. Shared standards for model validation, deployment, and ongoing monitoring are necessary to ensure responsible use and mitigate potential risks. Transparency and open communication are essential to fostering trust and accountability as these technologies are used in healthcare.