The Growing Role of AI in Education

AI-Powered Personalized Learning

AI can tailor educational experiences to individual student needs. Algorithms can analyze student performance data, identify learning gaps, and recommend personalized learning paths, which lets educators focus targeted support where it is most needed. Personalized learning can increase student motivation and improve academic outcomes, because students are more likely to succeed when materials and pace are adapted to their individual needs and capabilities.

This individualized approach can also help students who struggle with traditional learning methods. By adapting the curriculum to specific needs, AI can help bridge learning gaps and create more equitable educational opportunities for all students, regardless of their background or learning style.
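The gap-identification step described above can be illustrated with a minimal sketch. It assumes scores are fractions between 0 and 1 grouped by topic, and that a topic counts as a gap when its average falls below a chosen mastery threshold. The function names, the data layout, and the 0.7 threshold are hypothetical choices for illustration, not part of any particular product.

```python
from statistics import mean

# Assumed cutoff: a topic averaging below this is treated as a learning gap.
MASTERY_THRESHOLD = 0.7


def find_learning_gaps(scores_by_topic, threshold=MASTERY_THRESHOLD):
    """Return (topic, average) pairs below the threshold, weakest first."""
    averages = {
        topic: mean(scores)
        for topic, scores in scores_by_topic.items()
        if scores  # skip topics with no recorded attempts
    }
    gaps = [(topic, avg) for topic, avg in averages.items() if avg < threshold]
    return sorted(gaps, key=lambda item: item[1])


def recommend_path(scores_by_topic, threshold=MASTERY_THRESHOLD):
    """Suggest topics to revisit, starting with the lowest average."""
    return [topic for topic, _ in find_learning_gaps(scores_by_topic, threshold)]


student_scores = {
    "fractions": [0.4, 0.6],
    "decimals": [0.9, 0.8],
    "ratios": [0.65, 0.6],
}
path = recommend_path(student_scores)
```

A real system would weigh far more signals than topic averages, and a teacher would still review the suggested path before acting on it.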

Ethical Considerations in Data Privacy

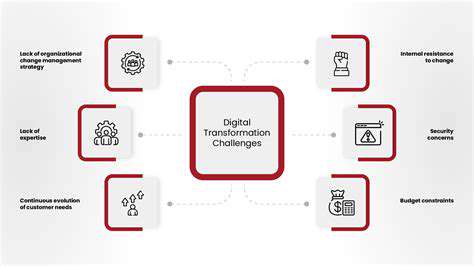

As AI systems in education collect and analyze vast amounts of student data, safeguarding student privacy and data security becomes paramount. Robust data protection measures and transparent data usage policies are crucial to ensure that student information is handled responsibly and ethically. Clear guidelines on data collection, storage, and use must be established and rigorously enforced to prevent misuse and ensure compliance with relevant privacy regulations.

Bias Mitigation in AI Algorithms

AI algorithms are trained on data, and if that data reflects existing societal biases, the algorithms can perpetuate and even amplify those biases in educational settings. It's essential to identify and mitigate potential biases in AI systems used in education to prevent unfair or discriminatory outcomes. This requires careful data curation, algorithm design, and ongoing evaluation to ensure fairness and equity in AI-driven educational interventions.
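One simple form of the ongoing evaluation mentioned above is to compare how often a system produces a favorable outcome for different student groups. The sketch below computes that rate per group and the gap between the highest and lowest rates. The record layout and function names are assumptions for illustration, and a rate gap is only one of several possible fairness checks.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """records: iterable of (group_label, was_recommended) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, was_recommended in records:
        totals[group] += 1
        positives[group] += int(was_recommended)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest group rates (0 means equal)."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit data: was each student recommended for an advanced track?
audit_records = [
    ("A", True), ("A", True), ("A", False), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = positive_rate_by_group(audit_records)
gap = parity_gap(audit_records)
```

A large gap does not prove the system is unfair on its own, but it flags where a closer human review of the data and the model is warranted.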

The Role of Human Educators

While AI can significantly enhance the learning experience, it's crucial to recognize that human educators remain indispensable. AI should be seen as a tool to augment, not replace, the critical role of teachers in fostering creativity, critical thinking, and emotional intelligence in students. Human interaction and mentorship are essential for developing well-rounded individuals who can navigate complex challenges in the future.

Accessibility and Inclusivity

AI tools have the potential to make education more accessible and inclusive for students with diverse learning needs. AI-powered tools can translate languages, provide real-time captioning, and offer alternative learning formats, ensuring that students with disabilities or learning differences can participate fully in the educational process. However, ensuring equitable access to these technologies and training teachers on their use is essential to realize their full potential.

The Future of AI in Education

The integration of AI in education is still in its early stages, and the potential applications are constantly evolving. As AI technology advances, we can anticipate even more sophisticated and personalized learning experiences. These include AI tutors that can provide instant feedback and support, intelligent assessment platforms that evaluate student progress in real time, and virtual learning environments that adapt to the specific needs of each student.

Accountability and Transparency in AI Systems

To build trust and ensure responsible use, AI systems in education must be designed with transparency and accountability in mind. Students and educators need to understand how AI tools operate and what data they collect. Clear mechanisms for feedback and redress should be in place to address any concerns or issues that arise from the use of AI in educational settings. Maintaining ongoing dialogue between educators, students, and AI developers is crucial for shaping the responsible integration of AI into education.

Ensuring Data Privacy and Security

Protecting User Data in AI Systems

Data privacy is paramount when developing AI systems. We must prioritize robust mechanisms for safeguarding sensitive user information throughout the entire lifecycle of the AI, from data collection and storage to processing and eventual disposal. This includes implementing strong encryption protocols, access controls, and regular security audits. Failing to adequately protect user data not only poses a significant risk to individuals but can also damage the reputation of the organization and lead to legal repercussions. Furthermore, transparent data policies and clear communication with users about how their data is being used are crucial for building trust and fostering ethical AI practices.

The specific measures required will vary depending on the nature of the AI system and the type of data it handles. For example, AI systems used for healthcare applications typically require more stringent security protocols than those used for social media engagement. Developing comprehensive data protection plans that address these varying needs is essential for ensuring user trust and compliance with regulations like GDPR or CCPA.

Implementing Secure Data Handling Practices

Beyond basic security measures, responsible AI development demands a commitment to secure data handling practices. This means implementing rigorous processes for data validation, sanitization, and anonymization. Validation checks that data is well-formed and plausible, reducing the risk of biased or inaccurate outputs from the AI model. Sanitization removes sensitive or irrelevant information, while anonymization transforms data so that individuals cannot be identified from it. These procedures are not just technical; they are ethical considerations that demonstrate a commitment to responsible AI development.
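The three steps above can be sketched for a single student record. The field names, the 0 to 100 score range, and the function names below are assumptions made for illustration. Note that the keyed hash used here is pseudonymization rather than full anonymization: anyone holding the secret key can link records back to a student, so the key must be protected accordingly.

```python
import hashlib
import hmac

# Assumed set of fields that should never reach the model or analytics store.
SENSITIVE_FIELDS = {"name", "email"}


def validate_record(record):
    """Check that the record has a string ID and a numeric score in 0..100."""
    score = record.get("score")
    return (
        isinstance(record.get("student_id"), str)
        and isinstance(score, (int, float))
        and not isinstance(score, bool)
        and 0 <= score <= 100
    )


def pseudonymize_id(student_id, secret_key):
    """Replace an ID with a keyed SHA-256 digest (stable for a given key)."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def sanitize_record(record, secret_key):
    """Validate, drop sensitive fields, and pseudonymize the student ID."""
    if not validate_record(record):
        raise ValueError("record failed validation")
    cleaned = {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}
    cleaned["student_id"] = pseudonymize_id(record["student_id"], secret_key)
    return cleaned


key = b"example-key-load-from-a-secret-store"  # placeholder, not a real key
raw = {"student_id": "s-001", "name": "Ada", "email": "ada@example.org", "score": 87}
clean = sanitize_record(raw, key)
```

Using a keyed hash instead of a plain hash makes it harder to recover IDs by hashing guesses, provided the key is kept out of the dataset and the codebase.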

Regular security audits and vulnerability assessments are critical components of a robust data security strategy. These assessments help identify weaknesses in the AI system's architecture and data handling processes. Addressing them proactively minimizes the risk of data breaches and protects both the AI system and the data it processes.

Establishing Transparency and Accountability

Transparency and accountability are essential pillars of ethical AI development. Users should be informed about how their data is used by the AI system and the potential implications of that use. Clear and concise explanations of the AI's decision-making processes, including the rationale behind specific choices, build trust and foster understanding. This not only helps users feel comfortable with the system but also allows for greater scrutiny and accountability if issues arise.
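For simple models, an explanation of a decision can be as direct as listing how much each input contributed to the result. The sketch below assumes a hypothetical linear "needs support" score, where the feature names and weights are invented for illustration. More complex models need dedicated explanation techniques, which this example does not attempt to cover.

```python
def explain_score(features, weights):
    """Return the total score and per-feature contributions, largest first."""
    contributions = {
        name: weights[name] * value
        for name, value in features.items()
        if name in weights  # ignore inputs the model does not use
    }
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return total, ranked


def format_explanation(ranked):
    """Render contributions as short signed lines a user can read."""
    return [f"{name}: {value:+.2f}" for name, value in ranked]


# Hypothetical weights and inputs for one student.
weights = {"missed_assignments": 0.5, "quiz_average": -0.25}
features = {"missed_assignments": 4, "quiz_average": 2}
total, ranked = explain_score(features, weights)
lines = format_explanation(ranked)
```

Showing the signed contributions lets a student or teacher see which inputs pushed the score up or down and challenge any that look wrong.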

Establishing clear lines of accountability within the organization is crucial for addressing any potential misuse or unintended consequences of the AI system. Having designated individuals or teams responsible for oversight and remediation of issues fosters trust and encourages proactive measures to prevent ethical breaches. This commitment to accountability is integral to ensuring the responsible use of AI and minimizing its potential negative impacts.

Promoting Transparency and Explainability in AI Tools

Promoting Openness in Decision-Making

Transparency is crucial for building trust and fostering accountability in any organization. Open communication about decision-making processes allows stakeholders to understand the context and rationale behind decisions. This understanding, in turn, can lead to increased acceptance and support for those decisions. Furthermore, it fosters a more collaborative environment where diverse perspectives are valued and considered.

By actively sharing information and engaging with stakeholders, organizations demonstrate a commitment to ethical practices. This proactive approach can significantly reduce the likelihood of misunderstandings and conflicts, ultimately leading to more positive outcomes.

Enhancing Accountability Through Clarity

Clear communication about responsibilities and expectations is essential for holding individuals and teams accountable. When everyone understands their roles and the standards to which they are expected to adhere, it becomes easier to track progress and identify areas where improvement is needed. This clarity also helps build a culture of responsibility where individuals feel empowered and motivated to contribute effectively.

Accountability hinges on transparency; when decisions and actions are clearly explained, individuals are more likely to take ownership of their contributions and understand the impact of their work on the organization's goals. This fosters a stronger sense of shared purpose.

Fostering Collaboration Through Shared Understanding

Promoting transparency and explainability creates a shared understanding of the organization's goals and objectives. This shared understanding is a cornerstone of effective collaboration, as team members can work together more effectively when they are aligned on the bigger picture and understand the rationale behind individual tasks. This alignment fosters a supportive work environment where individuals feel comfortable seeking clarification and offering constructive feedback.

Open communication channels facilitate collaboration; when teams can openly discuss challenges and solutions, they are more likely to arrive at innovative and effective strategies. This collaborative approach can lead to a more dynamic and creative work environment.

Improving Stakeholder Engagement and Trust

Transparency and explainability are essential for building trust with stakeholders. When stakeholders understand the rationale behind decisions and the processes involved, they are more likely to trust the organization and its leadership. This trust is critical for maintaining positive relationships and fostering long-term support. Stakeholders are more likely to be engaged when they feel heard and understood.

Openness and clarity are key to maintaining strong relationships with stakeholders; when organizations are transparent about their objectives and decision-making processes, stakeholders are more likely to support the organization's goals. This support is crucial for long-term success.

Fostering Human-AI Collaboration in the Classroom

Defining the Landscape of Human-AI Collaboration

The integration of Artificial Intelligence (AI) into educational settings presents a unique opportunity to revolutionize the learning experience. This requires a careful and thoughtful examination of how AI can augment human teachers, rather than replace them. We need to move beyond simple automation and explore collaborative models where AI tools enhance pedagogical approaches, tailor learning experiences, and provide personalized support for students. AI has the potential to drastically improve accessibility and equity in education, but only if ethically sound design principles guide its development and implementation.

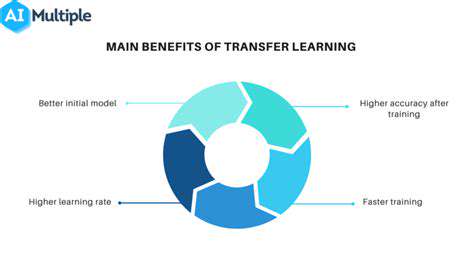

Personalized Learning Pathways through AI

AI algorithms can analyze vast datasets of student performance, learning styles, and even emotional responses to create highly personalized learning paths. This allows for targeted interventions and customized content delivery, ensuring that each student receives the support they need to thrive. Imagine a system that dynamically adjusts difficulty levels, recommends supplementary resources, and anticipates potential learning gaps before they arise. AI can empower both teachers and students by providing a more dynamic and efficient learning environment.
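The idea of dynamically adjusting difficulty can be illustrated with a simple rule: raise the level after a strong run of recent answers and lower it after a weak one. The level range, window of recent results, and accuracy cutoffs below are assumed values chosen for illustration. Production systems typically use richer statistical models of student knowledge.

```python
def next_difficulty(current, recent_results, min_level=1, max_level=5,
                    raise_at=0.8, lower_at=0.5):
    """Pick the next difficulty level from recent correct/incorrect answers.

    recent_results is a list of booleans (True means a correct answer).
    """
    if not recent_results:
        return current  # no evidence yet, so keep the current level
    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= raise_at:
        return min(current + 1, max_level)
    if accuracy < lower_at:
        return max(current - 1, min_level)
    return current


# Four of the last five answers were correct, so the level steps up from 2.
level = next_difficulty(2, [True, True, True, True, False])
```

Clamping to a minimum and maximum keeps the level within the available content, and the gap between the two cutoffs prevents the level from flipping back and forth on small changes in accuracy.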

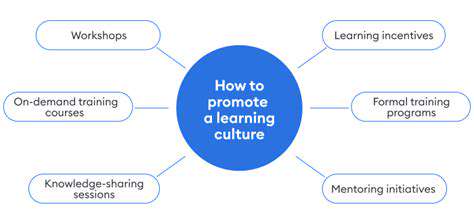

Enhancing Teacher Expertise with AI Support Tools

AI tools can act as assistants to teachers, freeing up time and mental space for more meaningful interactions with students. These tools can automate administrative tasks, provide data-driven insights into student progress, and even offer personalized lesson planning suggestions. Teachers can then focus on fostering critical thinking, creativity, and emotional intelligence, the very aspects that AI struggles to replicate. By augmenting, not replacing, human teachers, AI can create a powerful synergy in the classroom.

Addressing Equity and Accessibility through AI-Powered Resources

AI can significantly improve accessibility and equity in education by providing tools and resources tailored to diverse learning needs. Imagine students with learning disabilities or different cultural backgrounds benefiting from AI-powered translation services, personalized learning materials, and adaptive assessment tools. AI can help bridge the gap between students with varying levels of access to resources and opportunities, fostering a more inclusive and equitable learning environment for all.

Ethical Considerations in AI-Driven Education

The ethical implications of deploying AI in the classroom are paramount. Privacy concerns regarding student data must be addressed through robust security protocols and transparent data usage policies. Bias in AI algorithms can perpetuate existing inequalities, so rigorous testing and careful algorithm design are crucial to mitigate these risks. Educators must actively participate in shaping the ethical development and deployment of AI in education, ensuring that it serves to enhance human potential rather than create further disparities.

Fostering Critical Thinking and Creativity in the AI Age

While AI can automate certain tasks and provide personalized learning experiences, it is crucial to emphasize the development of human skills that AI cannot replicate. Critical thinking, problem-solving, creativity, and collaboration are essential for navigating the complexities of the modern world. Educators must guide students in developing these skills, using AI tools to amplify learning without displacing human interaction and mentorship. AI should be a tool to enhance human potential, not a replacement for it.