Continuous Monitoring for Early Detection

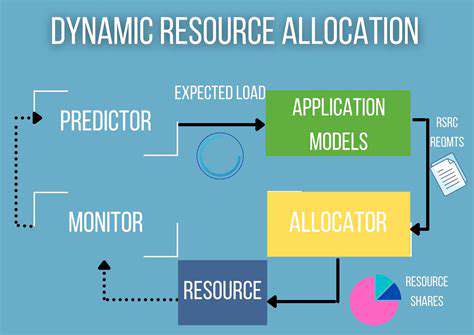

AI audits are no longer a one-time event. They are moving toward continuous monitoring, so that potential issues are detected early. This proactive approach lets organizations address biases, inaccuracies, and ethical concerns before they escalate into significant problems. Continuous monitoring gives real-time insight into how AI models perform, which helps organizations stay accountable for their AI systems and maintain trust in them. It also helps keep models aligned with organizational values and legal requirements as data and conditions change.

By continuously evaluating data inputs, model outputs, and decision-making processes, organizations can identify anomalies and deviations from expected behavior, such as a shift in the distribution of incoming data or of model scores. Early detection is crucial for mitigating risks and ensuring fairness and transparency in AI deployments. It also leaves time to make adjustments to algorithms, datasets, and processes.
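
The text does not name a specific drift metric. As one common option, the Population Stability Index (PSI) compares how a feature or model score is distributed in a reference window (for example, validation data) against live traffic. The following is a minimal pure-Python sketch, assuming equal-width bins over the reference range; the bin count, the `eps` floor for empty bins, the 0.25 alert threshold, and the example scores are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(reference: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10,
                               eps: float = 1e-6) -> float:
    """Population Stability Index between a reference and a current sample.

    Bins are equal-width over the reference range (an assumption; quantile
    bins are another common choice). Values outside that range are clipped
    into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant sample

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    # PSI = sum over bins of (current - reference) * ln(current / reference)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Hypothetical scores: validation-time scores vs. live scores drifting upward.
reference = [x / 100 for x in range(100)]
live = [0.5 + x / 200 for x in range(100)]
psi = population_stability_index(reference, live)
print("drift alert" if psi > 0.25 else "stable")  # drift alert
```

A frequently cited rule of thumb treats a PSI above about 0.25 as a significant shift worth investigating. Running a check like this on a schedule for each monitored input and output turns it into a simple early-warning signal.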

Enhanced Transparency and Explainability

Future AI audits will prioritize transparency and explainability. This means developing methods for understanding how AI systems arrive at their conclusions and making the reasoning behind AI decisions accessible and understandable to stakeholders. Clear explanations are essential for building trust in AI systems, particularly in high-stakes applications such as healthcare and finance. Transparency is also a practical necessity: errors and biases are hard to identify and correct in a process no one can inspect.

Explainable AI (XAI) techniques will play a critical role in achieving this goal. These techniques help demystify complex models by showing stakeholders which factors influence a given decision, and how strongly. That insight helps organizations address ethical concerns and ensure fairness and accountability in AI deployments.
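
As one illustration of a model-agnostic XAI technique (the text does not prescribe one), permutation importance shuffles one feature at a time and measures how much the model's accuracy drops: features the model relies on cause a large drop, and ignored features cause none. The sketch below is pure Python; the toy model, data, and seed are hypothetical. scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], int]


def accuracy(model: Model, X: List[List[float]], y: List[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: List[int],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model that only looks at feature 0; feature 1 is noise.
model: Model = lambda row: int(row[0] > 0.5)
X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [model(row) for row in X]
# Feature 1 (ignored by the model) scores exactly 0.0; feature 0 scores above 0.
print(permutation_importance(model, X, y))
```

Because the method only calls the model's prediction function, the same audit code works for any model type, which is why it is a common starting point for explaining black-box systems.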

Integration with DevOps Practices

AI audits will be integrated into DevOps practices so that AI models are monitored and evaluated consistently throughout their lifecycle. This integration supports continuous improvement and leads to more robust and reliable AI deployments. It requires careful consideration of version control, deployment pipelines, and logging mechanisms for AI systems, so that each audit result can be traced to a specific model version and dataset.

By building AI audits into DevOps workflows, organizations can streamline the testing, deployment, and maintenance of AI systems and gain a holistic view of those systems, from development to production. This makes it possible to identify and fix issues in real time, reducing the risk of errors and improving the overall efficiency of AI deployments.
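
To make audit results traceable in a pipeline, each stage can emit a structured log entry that ties its metrics to the exact model version and data snapshot. The sketch below assumes a JSON Lines log and a git commit SHA as the model version; the field names, stage labels, and example values are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, Optional


def fingerprint(data: bytes) -> str:
    """SHA-256 digest that pins exactly which dataset snapshot was audited."""
    return hashlib.sha256(data).hexdigest()


def audit_record(stage: str, model_version: str, dataset_bytes: bytes,
                 metrics: Dict[str, float],
                 timestamp: Optional[datetime] = None) -> str:
    """Serialize one audit entry as a single JSON Lines row."""
    ts = timestamp if timestamp is not None else datetime.now(timezone.utc)
    record = {
        "timestamp": ts.isoformat(),
        "stage": stage,                  # hypothetical labels: "train", "pre-deploy", "production"
        "model_version": model_version,  # e.g. the git commit SHA of the training code
        "dataset_sha256": fingerprint(dataset_bytes),
        "metrics": metrics,
    }
    # sort_keys makes identical inputs produce byte-identical lines, which eases diffing.
    return json.dumps(record, sort_keys=True) + "\n"


line = audit_record("pre-deploy", "3f2c1a9", b"age,income\n34,52000\n",
                    {"accuracy": 0.91})
print(line, end="")
```

In a pipeline step, the returned line would be appended to a log file (opened in append mode) stored alongside the model artifacts. Because the entry records a hash rather than the data itself, auditors can later verify which dataset was used without the log growing with the data.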

Focus on Data Quality and Bias Detection

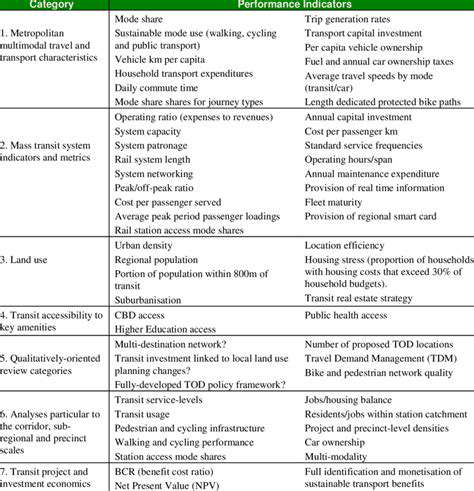

A critical component of future AI audits will be a sharp focus on data quality and bias detection. Organizations need to ensure that the data used to train AI models is accurate, comprehensive, and representative of the target population. High data quality is essential for reliable AI models and for reducing the risk that they reproduce or amplify biases present in the data.

Advanced techniques for detecting and mitigating biases in datasets will be paramount. These techniques will help organizations build AI systems that are fair, equitable, and inclusive. This focus on data quality and bias detection will help address ethical concerns and maintain public trust in AI technologies.
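
One widely used check for outcome bias compares selection rates (the share of favourable outcomes) across groups. The ratio of the lowest rate to the highest is often called the disparate impact ratio, and the "four-fifths rule" from US employment-selection guidelines flags ratios below 0.8. The sketch assumes binary outcomes (1 = favourable) and a single group attribute; the group labels and counts in the example are made up.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(groups: Sequence[Hashable],
                    outcomes: Sequence[int]) -> Dict[Hashable, float]:
    """Share of favourable outcomes (1) within each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(groups: Sequence[Hashable],
                           outcomes: Sequence[int]) -> float:
    """Lowest group selection rate divided by the highest (1.0 = parity).

    If no group receives any favourable outcome, this returns 1.0 (treated as parity).
    """
    rates = selection_rates(groups, outcomes).values()
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0


# Made-up example: group A is selected 6/10 times, group B 3/10 times.
groups = ["A"] * 10 + ["B"] * 10
outcomes = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
ratio = disparate_impact_ratio(groups, outcomes)
print(f"{ratio:.2f}", "flag for review" if ratio < 0.8 else "ok")  # 0.50 flag for review
```

A low ratio does not by itself prove unfair treatment, but it tells auditors where to look, for example at whether the training data under-represents one group.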

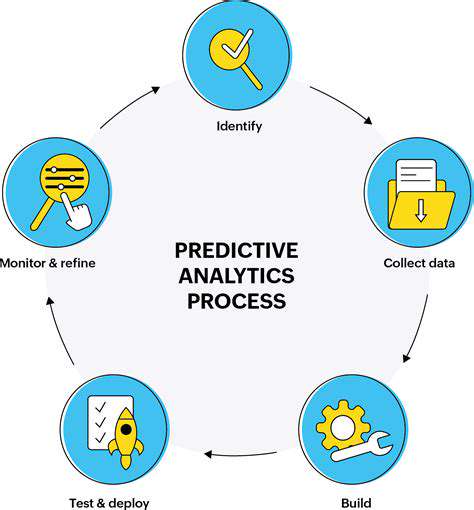

Automated Audit Frameworks and Tools

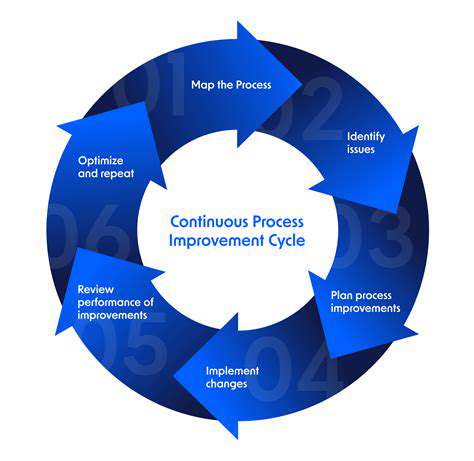

The future of AI audits will rely heavily on automated audit frameworks and tools. These tools automate the identification and assessment of risks in AI systems, saving time and resources. Automation allows organizations to audit more frequently and comprehensively, helping to keep AI models and systems aligned with organizational values.

These automated tools will also streamline reporting and remediation, improving the overall efficiency of the audit process. Developing and deploying such tools will be critical to scaling AI audits across organizations of all sizes.
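
A minimal way to picture an automated audit framework is a registry of checks that runs on every audit and produces a pass/fail report that can drive reporting and remediation. In the sketch below, the check names, the thresholds (0.85 accuracy, 0.8 disparate impact), and the metrics dictionary are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Metrics = Dict[str, float]


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


# Registry of audit checks; each takes the collected metrics and returns a result.
CHECKS: List[Callable[[Metrics], CheckResult]] = []


def audit_check(fn: Callable[[Metrics], CheckResult]) -> Callable[[Metrics], CheckResult]:
    """Decorator that registers a check so every audit run includes it."""
    CHECKS.append(fn)
    return fn


@audit_check
def accuracy_floor(m: Metrics) -> CheckResult:
    value = m.get("accuracy", 0.0)  # missing metric fails closed
    return CheckResult("accuracy_floor", value >= 0.85, f"accuracy={value}")


@audit_check
def fairness_ratio(m: Metrics) -> CheckResult:
    value = m.get("disparate_impact", 0.0)  # missing metric fails closed
    return CheckResult("fairness_ratio", value >= 0.8, f"disparate_impact={value}")


def run_audit(metrics: Metrics) -> Dict[str, object]:
    """Run every registered check and summarise the outcome for reporting."""
    results = [check(metrics) for check in CHECKS]
    return {
        "passed": all(r.passed for r in results),
        "failures": [r.name for r in results if not r.passed],
        "details": [r.detail for r in results],
    }


report = run_audit({"accuracy": 0.91, "disparate_impact": 0.72})
print(report["passed"], report["failures"])  # False ['fairness_ratio']
```

Missing metrics default to 0.0, so a check fails rather than silently passing. In a CI job, exiting with a non-zero status when `report["passed"]` is false would block a deployment until the listed failures are remediated, and new checks can be added without touching the runner.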