Practical Approaches to Reduce AI Bias

Building Better Datasets

Thoughtful data gathering is the foundation of less biased AI. Teams should examine each data source for hidden prejudices and for the historical context in which it was produced. Data cleaning helps, but fairness also requires that every relevant group is adequately represented in the training data.
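
One simple way to check representation is to compare each group's share of the training data with a reference share for the population the system will serve. The sketch below assumes group labels are available for every record and that reference shares come from an external source such as census figures. The function name `representation_gaps` and the 5-percentage-point `tolerance` are illustrative choices, not a standard.

```python
from collections import Counter

def representation_gaps(groups, reference_shares, tolerance=0.05):
    """Return groups whose share of the dataset falls short of the
    reference share by more than `tolerance` (shortfall rounded to 3 dp)."""
    if not groups:
        raise ValueError("groups must be non-empty")
    counts = Counter(groups)
    total = len(groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        shortfall = expected - observed
        if shortfall > tolerance:
            gaps[group] = round(shortfall, 3)
    return gaps

# Hypothetical group labels for 10 training records and hypothetical
# reference shares for the population the model will serve.
groups = ["A"] * 7 + ["B"] * 2 + ["C"] * 1
reference = {"A": 0.5, "B": 0.3, "C": 0.2}
print(representation_gaps(groups, reference))  # {'B': 0.1, 'C': 0.1}
```

A check like this only covers group labels you already record; groups that are missing from the schema entirely need the kind of source review described above.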

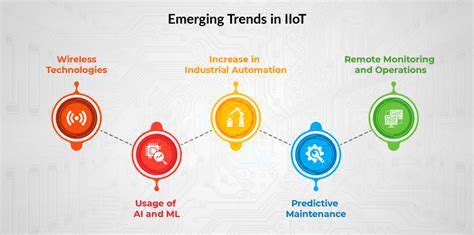

Designing Fairer Algorithms

The choice of algorithm significantly affects bias. Some newer approaches build fairness constraints directly into the training objective, which helps prevent discrimination based on protected characteristics such as race, gender, or age. Evaluation metrics also need rethinking: traditional measures such as overall accuracy can mask large differences in how a model treats different groups.
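
One common way to build a fairness constraint into a model is to add a penalty term to its training loss. The sketch below assumes demographic parity (equal average predicted scores across two groups) as the fairness criterion and trains a plain logistic regression by gradient descent on log-loss plus `lam` times the squared gap between the two groups' mean scores. The functions `train_fair_logreg` and `parity_gap`, the toy data, and the penalty weight are hypothetical illustrations, not taken from any particular library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def parity_gap(w, b, X, group):
    """Mean predicted score of group 0 minus mean predicted score of group 1."""
    a = [predict(w, b, x) for x, g in zip(X, group) if g == 0]
    c = [predict(w, b, x) for x, g in zip(X, group) if g == 1]
    return sum(a) / len(a) - sum(c) / len(c)

def train_fair_logreg(X, y, group, lam=0.0, lr=0.5, epochs=2000):
    """Minimise average log-loss + lam * parity_gap**2 by gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    ga = [i for i in range(n) if group[i] == 0]
    gc = [i for i in range(n) if group[i] == 1]
    for _ in range(epochs):
        p = [predict(w, b, x) for x in X]
        # Gradient of the average log-loss.
        gw = [sum((p[i] - y[i]) * X[i][j] for i in range(n)) / n for j in range(d)]
        gb = sum(p[i] - y[i] for i in range(n)) / n
        # Penalty gradient: d(gap^2) = 2 * gap * d(gap), using dp/dz = p(1 - p).
        gap = sum(p[i] for i in ga) / len(ga) - sum(p[i] for i in gc) / len(gc)
        s = [p[i] * (1 - p[i]) for i in range(n)]
        for j in range(d):
            dgap = (sum(s[i] * X[i][j] for i in ga) / len(ga)
                    - sum(s[i] * X[i][j] for i in gc) / len(gc))
            gw[j] += lam * 2 * gap * dgap
        dgap_b = sum(s[i] for i in ga) / len(ga) - sum(s[i] for i in gc) / len(gc)
        gb += lam * 2 * gap * dgap_b
        w = [wj - lr * gj for wj, gj in zip(w, gw)]
        b -= lr * gb
    return w, b

# Hypothetical toy data: the first feature is a perfect proxy for group membership.
X = [[1, 0.2], [1, 0.8], [1, 0.5], [1, 0.9], [0, 0.3], [0, 0.7], [0, 0.1], [0, 0.6]]
y = [1, 1, 0, 1, 0, 1, 0, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]
for lam in (0.0, 5.0):
    w, b = train_fair_logreg(X, y, group, lam=lam)
    print(lam, round(parity_gap(w, b, X, group), 3))
```

With `lam=0` the fitted model reproduces the groups' different label rates (a gap near 0.5 on this toy data); raising `lam` trades some log-loss for a smaller gap. Demographic parity is only one fairness criterion, and others, such as equalized odds, can conflict with it.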

New Metrics for Fairness

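We need specialized measurements that go beyond overall accuracy and assess how an AI system treats different groups. Disparate impact analysis, for example, compares the rate of favorable outcomes a protected group receives with the rate for a reference group, helping reveal where a system creates unfair advantages or disadvantages.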
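
A minimal sketch of disparate impact analysis, assuming binary outcomes (1 = favorable) and a single protected attribute: compute each group's selection rate and divide the protected group's rate by the reference group's. The 0.8 cutoff used below is the "four-fifths rule" of thumb from US employment-selection guidelines; other domains may use different thresholds. The function names and data are hypothetical.

```python
def selection_rate(outcomes):
    """Fraction of favorable (1) outcomes."""
    return sum(outcomes) / len(outcomes)

def disparate_impact(outcomes, groups, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    prot = [o for o, g in zip(outcomes, groups) if g == protected]
    ref = [o for o, g in zip(outcomes, groups) if g == reference]
    ref_rate = selection_rate(ref)
    if ref_rate == 0:
        raise ValueError("reference group has no favorable outcomes")
    return selection_rate(prot) / ref_rate

# Hypothetical decisions (1 = approved) for ten applicants.
outcomes = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["ref"] * 5 + ["prot"] * 5
ratio = disparate_impact(outcomes, groups, protected="prot", reference="ref")
print(round(ratio, 3), "flag" if ratio < 0.8 else "ok")  # 0.667 flag
```

A ratio of 1.0 means equal selection rates. The ratio says nothing about why the rates differ, so a flag is a prompt for investigation rather than proof of discrimination.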

Cleaning the Data Pipeline

Because bias often originates in training data, the data pipeline needs thorough screening. Remedies include augmenting the data for underrepresented groups and running targeted collection efforts to fill gaps.
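
Augmenting an underrepresented group can be as simple as random oversampling: duplicating randomly chosen records from smaller groups until the group sizes match. The sketch assumes each record is a dictionary with a group field; `oversample_by_group` is a hypothetical helper, not a library function.

```python
import random
from collections import Counter, defaultdict

def oversample_by_group(records, group_key, seed=0):
    """Randomly duplicate records from smaller groups until every group
    matches the size of the largest one."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)
    target = max(len(rs) for rs in by_group.values())
    balanced = []
    for rs in by_group.values():
        balanced.extend(rs)
        # Sample with replacement to make up the shortfall.
        balanced.extend(rng.choice(rs) for _ in range(target - len(rs)))
    return balanced

# Hypothetical records: group "B" is underrepresented 6:2.
records = [{"group": "A", "x": i} for i in range(6)] + [{"group": "B", "x": i} for i in range(2)]
balanced = oversample_by_group(records, "group")
print(Counter(r["group"] for r in balanced))  # Counter({'A': 6, 'B': 6})
```

Duplicated records add no new information and can encourage overfitting, and oversampling does nothing about biased labels; that is why targeted collection of genuinely new data remains important.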

Opening the Black Box

Understanding AI decision-making is crucial for spotting bias. More interpretable models allow developers to identify and correct prejudiced patterns that might otherwise remain hidden.
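
When a model cannot be made inherently interpretable, a post-hoc technique such as permutation feature importance can still show which inputs drive its decisions: shuffle one input column and measure how much accuracy drops. The sketch below uses a hypothetical stand-in model and made-up rows whose second column is a neighborhood score that happens to track group membership.

```python
import random

def accuracy(model, X, y):
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)

def permutation_importance(model, X, y, feature, n_repeats=20, seed=0):
    """Average drop in accuracy when one feature column is shuffled.
    A large drop means the model relies heavily on that feature."""
    rng = random.Random(seed)
    base = accuracy(model, X, y)
    drops = []
    for _ in range(n_repeats):
        column = [x[feature] for x in X]
        rng.shuffle(column)
        X_perm = [x[:feature] + [v] + x[feature + 1:] for x, v in zip(X, column)]
        drops.append(base - accuracy(model, X_perm, y))
    return sum(drops) / n_repeats

def model(x):
    """Hypothetical opaque model: thresholds the neighborhood score."""
    return int(x[1] > 0.5)

# Hypothetical rows: [protected attribute, neighborhood score].
X = [[0, 0.9], [1, 0.2], [0, 0.7], [1, 0.1], [0, 0.8], [1, 0.3], [0, 0.6], [1, 0.4]]
y = [model(x) for x in X]  # labels that agree with the model, for illustration
print(permutation_importance(model, X, y, feature=0))      # 0.0
print(permutation_importance(model, X, y, feature=1) > 0)  # True
```

Here the protected attribute (column 0) shows zero importance, yet the model's decisions still split perfectly along group lines because column 1 acts as a proxy. Importance scores should therefore be read alongside checks of how inputs correlate with protected attributes.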

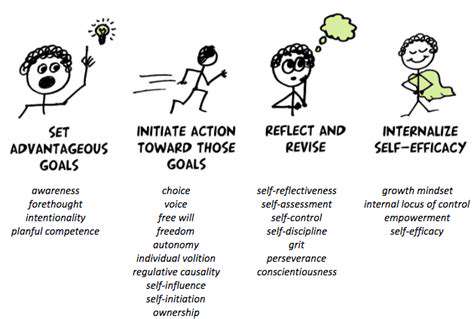

Continuous Improvement

Bias can emerge over time as input data and user populations shift, so ongoing monitoring is essential. Regular testing across demographic groups helps ensure that systems remain fair once they are deployed.
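
Ongoing monitoring can be sketched as a per-group accuracy check run on each batch of production decisions, assuming ground-truth labels eventually become available for that batch. The 0.1 gap threshold and the function names are placeholder choices; a real deployment would tune the threshold and might also track other per-group metrics, such as false positive rates.

```python
from collections import defaultdict

def group_accuracy(preds, labels, groups):
    """Accuracy computed separately for each group."""
    hits, totals = defaultdict(int), defaultdict(int)
    for p, t, g in zip(preds, labels, groups):
        totals[g] += 1
        hits[g] += int(p == t)
    return {g: hits[g] / totals[g] for g in totals}

def check_batch(preds, labels, groups, max_gap=0.1):
    """Return per-group accuracy, the largest gap between groups, and
    whether that gap exceeds the alert threshold."""
    acc = group_accuracy(preds, labels, groups)
    gap = max(acc.values()) - min(acc.values())
    return acc, gap, gap > max_gap

# Hypothetical batch of eight labeled production decisions.
preds = [1, 1, 0, 0, 1, 0, 1, 0]
labels = [1, 1, 0, 0, 0, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(check_batch(preds, labels, groups))  # ({'A': 1.0, 'B': 0.5}, 0.5, True)
```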

The Human Safety Net

Despite technological advances, human judgment remains irreplaceable. Experts must review AI outputs for subtle discrimination, maintaining the ability to override questionable decisions when necessary.
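
One way to encode this safety net is a routing rule: decisions below a confidence threshold go to a person, and a random sample of confident decisions is audited as well, since subtle discrimination need not show up as low confidence. In the sketch, the `Decision` record, the 0.8 threshold, and the 5% audit rate are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class Decision:
    case_id: str
    model_label: int
    confidence: float
    final_label: Optional[int] = None
    reviewed_by: Optional[str] = None

def route(decision, threshold=0.8, audit_rate=0.05, rng=random):
    """Send low-confidence decisions, plus a random sample of confident
    ones, to human review; auto-accept the rest."""
    if decision.confidence < threshold or rng.random() < audit_rate:
        return "human_review"
    decision.final_label = decision.model_label
    return "auto"

def human_override(decision, reviewer, label):
    """Record the reviewer's final call, which takes precedence over the model."""
    decision.final_label = label
    decision.reviewed_by = reviewer
    return decision

confident = Decision("case-1", model_label=1, confidence=0.95)
uncertain = Decision("case-2", model_label=0, confidence=0.55)
print(route(confident, audit_rate=0.0))  # auto
print(route(uncertain, audit_rate=0.0))  # human_review
human_override(uncertain, reviewer="analyst-7", label=1)
print(uncertain.final_label, uncertain.reviewed_by)  # 1 analyst-7
```

Passing `audit_rate=0.0` in the example keeps the output deterministic; in practice a nonzero rate ensures reviewers also see cases the model is sure about.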

Building Trust Through Transparency

Demystifying AI Decisions

Creating trustworthy AI requires making its workings understandable to users, developers, and regulators alike. Clear explanations of decisions help identify potential biases and build confidence in the technology.

Explainability is not just a technical matter; it is also about responsibility. Understanding why an AI system makes certain choices allows us to improve its fairness and correct its errors.

Spotting Hidden Prejudice

Because AI learns from human-generated data, it readily absorbs the biases embedded in that data. Regular audits that use diverse testing methods can uncover these issues before they cause harm.
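
One audit method is a counterfactual flip test: change only the protected attribute of each record and count how often the decision changes. The two models and the records below are invented for illustration, and `counterfactual_flip_rate` is a hypothetical helper.

```python
def counterfactual_flip_rate(model, records, attr, values):
    """Fraction of records whose decision changes when only `attr` is
    swapped to another value, with all other fields held fixed."""
    flipped = 0
    for record in records:
        original = model(record)
        for value in values:
            if value == record[attr]:
                continue
            if model({**record, attr: value}) != original:
                flipped += 1
                break
    return flipped / len(records)

def biased_model(r):
    """Hypothetical model that uses the group attribute directly."""
    return int(r["score"] > 50 and r["group"] == "A")

def neutral_model(r):
    """Hypothetical model that ignores the group attribute."""
    return int(r["score"] > 50)

records = [{"score": 70, "group": "A"}, {"score": 40, "group": "A"},
           {"score": 80, "group": "B"}, {"score": 30, "group": "B"}]
print(counterfactual_flip_rate(biased_model, records, "group", ["A", "B"]))   # 0.5
print(counterfactual_flip_rate(neutral_model, records, "group", ["A", "B"]))  # 0.0
```

A zero flip rate shows only that the model does not use the attribute directly; it can still discriminate through correlated proxies, so this test complements rather than replaces group-level metrics.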

Keeping Humans in the Loop

AI should enhance human decision-making, not replace it. Clear protocols for human oversight ensure systems align with ethical values, especially in critical applications.

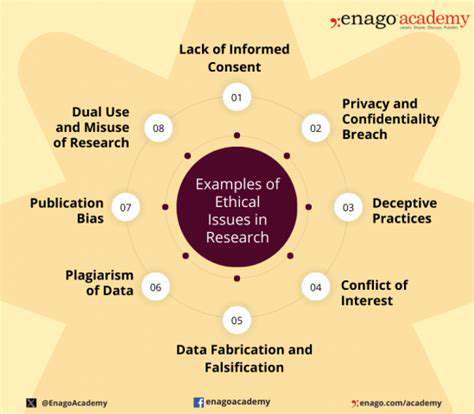

Establishing Ethical Guidelines

Developing comprehensive standards for AI creation and use helps prevent harm. These guidelines should emphasize fairness, accountability, and respect for human rights at every stage.

The Ethical Dimension of AI Progress

Balancing Innovation and Ethics

The rapid evolution of AI brings both promise and peril. As these systems permeate more aspects of daily life, we must prioritize ethical considerations to prevent harm. This means building fairness, transparency, and accountability into every AI application.

From hiring to criminal justice, AI systems risk reinforcing existing inequalities. Only through rigorous ethical frameworks can we harness AI's potential while minimizing negative impacts.

Clear Reasoning for Better Outcomes

Many AI systems operate as inscrutable black boxes, creating distrust. The ability to understand and explain AI decisions is fundamental to building fair systems. This transparency also makes it easier to identify and fix errors in the technology.

Data Quality as a Moral Obligation

Since AI learns from historical data, that data must represent diverse perspectives. Thoughtful data collection and continuous monitoring are essential safeguards against algorithmic discrimination.
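
Continuous monitoring of the data itself can include tracking whether the group composition of incoming data drifts away from that of the training data. The sketch computes the population stability index (PSI) over group labels: the sum over categories of (actual share minus expected share) times ln(actual share / expected share). The function names and the `eps` floor for empty categories are assumptions of this sketch. A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these cutoffs are conventions rather than standards.

```python
import math
from collections import Counter

def group_shares(groups, categories, eps=1e-6):
    """Share of each category; empty categories get a tiny floor `eps`
    so the logarithm below stays defined."""
    counts = Counter(groups)
    n = len(groups)
    return {c: max(counts.get(c, 0) / n, eps) for c in categories}

def psi(baseline, current):
    """Population stability index between two samples of group labels."""
    cats = sorted(set(baseline) | set(current))
    expected = group_shares(baseline, cats)
    actual = group_shares(current, cats)
    return sum((actual[c] - expected[c]) * math.log(actual[c] / expected[c]) for c in cats)

# Hypothetical group labels: training-time sample vs. a recent production sample.
baseline = ["A"] * 50 + ["B"] * 50
current = ["A"] * 80 + ["B"] * 20
print(round(psi(baseline, current), 3))  # 0.416
print(psi(baseline, baseline))           # 0.0
```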

Defining Responsibility in the AI Age

As AI systems grow more autonomous, we must clarify accountability. Clear guidelines are needed to determine responsibility when AI causes harm. This requires collaboration between technologists, policymakers, and ethicists to create appropriate oversight mechanisms.
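
On the technical side, assigning responsibility after the fact depends on being able to reconstruct what a system decided, with which model version, and whether a person signed off. A minimal audit-trail sketch, assuming decisions are logged as JSON lines: each entry records a UTC timestamp, the model version, a SHA-256 hash of the inputs, the output, and the reviewer if any. The field names and the model version string are hypothetical.

```python
import hashlib
import io
import json
from datetime import datetime, timezone

def audit_record(model_version, inputs, output, reviewer=None):
    """Build one audit-log entry. Inputs are stored as a SHA-256 hash of
    their canonical JSON form rather than in raw form."""
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "output": output,
        "reviewer": reviewer,  # None when no human was involved
    }

def append_to_log(log, record):
    """Append one record as a JSON line; earlier lines are never rewritten."""
    log.write(json.dumps(record) + "\n")

# Hypothetical usage with an in-memory log; a real system would use durable storage.
log = io.StringIO()
append_to_log(log, audit_record("credit-model-1.3", {"income": 52000, "age": 41}, "approve"))
append_to_log(log, audit_record("credit-model-1.3", {"income": 18000, "age": 29}, "refer",
                                reviewer="analyst-7"))
print(len(log.getvalue().splitlines()))  # 2
```

Hashing the canonical JSON of the inputs keeps raw personal data out of the log while still letting an investigator who holds the original record confirm which inputs produced a decision.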