Optimizing Video Processing Algorithms for Edge Deployment

Hardware Acceleration for Enhanced Performance

Optimizing video processing algorithms for edge deployment depends heavily on hardware acceleration. Many edge devices include GPUs and specialized processors, such as neural processing units, DSPs, and fixed-function video encode/decode blocks, that are designed to execute parallel workloads efficiently. Offloading work to them makes it possible to process video streams in real time with low latency, which is crucial for applications that demand immediate feedback, such as video surveillance and augmented reality. Using libraries and frameworks optimized for the target hardware is essential for realizing these performance gains.

Developers need to consider the specific hardware capabilities of the target edge device. Different architectures offer varying levels of parallel processing power and memory bandwidth. Matching the algorithm to the available resources keeps utilization high and avoids bottlenecks, such as a stage that exceeds the per-frame time budget or saturates memory bandwidth. Identifying and addressing these bottlenecks, ideally by profiling on the target device, is critical for deploying efficient and responsive video processing solutions.
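As a rough illustration of matching an algorithm to a device, the sketch below checks two budgets: the time available per frame and the memory traffic generated by moving uncompressed frames. The `StreamSpec` type, the function names, and the `passes` parameter (how many times each frame is read or written) are assumptions made for this example, and the device figures would have to come from measurements on real hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamSpec:
    """Hypothetical description of an uncompressed video stream."""
    width: int
    height: int
    fps: float
    bytes_per_pixel: float  # 1.5 for YUV 4:2:0, 3.0 for packed RGB


def frame_budget_ms(spec: StreamSpec) -> float:
    """Time available per frame if processing must keep up with the source."""
    return 1000.0 / spec.fps


def memory_traffic_mb_s(spec: StreamSpec, passes: int = 1) -> float:
    """Memory traffic in MB/s when each frame is read or written `passes` times."""
    bytes_per_frame = spec.width * spec.height * spec.bytes_per_pixel
    return bytes_per_frame * spec.fps * passes / 1e6


def fits_device(spec: StreamSpec, measured_ms_per_frame: float,
                device_bandwidth_mb_s: float, passes: int = 1) -> bool:
    """True if both the time budget and the bandwidth budget are respected."""
    within_time = measured_ms_per_frame <= frame_budget_ms(spec)
    within_bandwidth = memory_traffic_mb_s(spec, passes) <= device_bandwidth_mb_s
    return within_time and within_bandwidth


if __name__ == "__main__":
    full_hd = StreamSpec(width=1920, height=1080, fps=30, bytes_per_pixel=1.5)
    print(f"budget per frame: {frame_budget_ms(full_hd):.1f} ms")
    print(f"traffic for 3 passes: {memory_traffic_mb_s(full_hd, passes=3):.1f} MB/s")
```

A check like this only flags obvious mismatches. Cache behavior, contention with other workloads, and accelerator transfer overheads are not modeled here.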

Algorithm Selection and Customization for Specific Needs

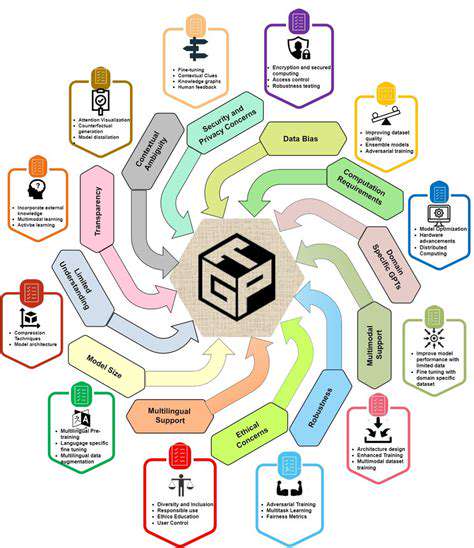

Selecting the most appropriate video processing algorithm is crucial for edge deployment optimization. Algorithms need to be tailored to the specific needs of the application. For instance, a video surveillance system may prioritize object detection and recognition, while a video conferencing application might emphasize low-latency transmission. Consequently, the choice of algorithm significantly impacts the computational complexity and resource consumption.

Customizing existing algorithms or developing novel ones to meet specific edge device constraints is often necessary. This might involve simplifying the algorithm to reduce computational load, or adapting it to leverage specific hardware features. This level of customization ensures that the algorithm operates efficiently within the limited resources of the edge device, minimizing latency and maximizing performance.
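Two common ways to simplify a pipeline are to process fewer frames and to process fewer pixels per frame. The following is a minimal sketch of both, assuming frames are represented as nested lists of pixel values. The function names are hypothetical, and a real deployment would normally rely on a hardware scaler or an optimized image library rather than pure Python.

```python
import math
from typing import List, Sequence


def frame_stride(source_fps: float, sustainable_fps: float) -> int:
    """Process every Nth frame so the effective rate stays within what the device sustains."""
    if source_fps <= 0 or sustainable_fps <= 0:
        raise ValueError("frame rates must be positive")
    return max(1, math.ceil(source_fps / sustainable_fps))


def select_frames(frames: Sequence, stride: int) -> List:
    """Keep every `stride`-th frame, starting with the first."""
    return list(frames[::stride])


def downscale_nearest(frame: Sequence[Sequence[int]], factor: int) -> List[List[int]]:
    """Nearest-neighbour decimation: keep every `factor`-th pixel on both axes."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return [list(row[::factor]) for row in frame[::factor]]


if __name__ == "__main__":
    # A device that sustains 12 fps on a 30 fps source processes every 3rd frame.
    stride = frame_stride(source_fps=30, sustainable_fps=12)
    print(stride, select_frames(list(range(10)), stride))
```

Both reductions trade accuracy for speed: skipped frames can miss short events, and decimation without filtering can introduce aliasing, so the acceptable stride and scale factor depend on the application.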

Data Compression and Representation for Bandwidth Efficiency

Efficient data compression plays a vital role in optimizing video processing for edge deployment. Reducing the size of the video data lowers bandwidth requirements, which enables smooth transmission and processing over limited network connections. Lossy codecs such as H.264 (AVC) and H.265 (HEVC) are commonly employed because they offer a good balance between compression ratio and visual quality. The compression level, typically controlled through the target bitrate or the quantization settings, should be chosen so that the data fits the available bandwidth while image quality remains acceptable for the application.
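To see why compression is unavoidable on constrained links, it helps to compare the raw bitrate of a stream with the capacity of the link. The sketch below does that arithmetic. The function names, the 12 bits per pixel default (YUV 4:2:0 at 8 bits per sample), and the `headroom` fraction reserved for other traffic are assumptions chosen for illustration.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float,
                     bits_per_pixel: float = 12.0) -> float:
    """Uncompressed bitrate in Mbit/s (12 bits per pixel assumes 8-bit YUV 4:2:0)."""
    return width * height * bits_per_pixel * fps / 1e6


def required_compression_ratio(raw_mbps: float, link_mbps: float,
                               headroom: float = 0.8) -> float:
    """Ratio the encoder must achieve to fit into `headroom` of the link capacity."""
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    if link_mbps <= 0:
        raise ValueError("link capacity must be positive")
    return raw_mbps / (link_mbps * headroom)


if __name__ == "__main__":
    raw = raw_bitrate_mbps(1920, 1080, 30)
    ratio = required_compression_ratio(raw, link_mbps=5.0)
    print(f"raw: {raw:.1f} Mbit/s, required ratio: {ratio:.0f}:1")
```

Under these assumptions a 1080p stream at 30 frames per second is roughly 746 Mbit/s uncompressed, so a 5 Mbit/s uplink with 20% headroom needs a compression ratio of about 187:1. Whether that ratio yields acceptable quality depends on the content and the codec settings.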

Optimized data structures and representations for video data can also improve bandwidth efficiency, for example by using formats designed for edge devices. Any resulting reduction in data size translates directly into lower bandwidth consumption and faster processing at the edge.

Real-Time Processing and Low-Latency Considerations

Real-time video processing at the edge demands algorithms that can handle incoming data streams with minimal delay. Optimizing for low latency is essential for applications that require immediate feedback, such as augmented reality and live streaming. This involves careful consideration of the algorithm's processing time and the ability to handle variable frame rates. Minimizing the time it takes to process each frame and to output the results is vital for seamless real-time operation.

Techniques such as asynchronous processing, multi-threading, and task prioritization help reduce latency. Careful task scheduling and efficient data pipelines ensure that video frames are processed and transmitted on time, without stalls or interruptions.
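One way to combine asynchronous processing with a latency bound is to decouple capture from processing through a small queue that discards the oldest frame when it is full. The sketch below assumes a single producer thread and a single worker thread. `LatestFrameQueue`, `run_pipeline`, and the `process` callback are hypothetical names introduced for this example.

```python
import queue
import threading
from typing import Any, Callable, Iterable, List, Tuple

_STOP = object()  # sentinel that tells the worker to exit


class LatestFrameQueue:
    """Bounded queue that drops the oldest item when full (single producer assumed)."""

    def __init__(self, maxsize: int = 2) -> None:
        self._q: "queue.Queue[Any]" = queue.Queue(maxsize=maxsize)
        self.dropped = 0

    def put(self, frame: Any) -> None:
        while True:
            try:
                self._q.put_nowait(frame)
                return
            except queue.Full:
                try:
                    self._q.get_nowait()  # discard the oldest queued frame
                    self.dropped += 1
                except queue.Empty:
                    pass  # the worker emptied the queue in the meantime; retry

    def get(self) -> Any:
        return self._q.get()


def run_pipeline(frames: Iterable[Any], process: Callable[[Any], Any],
                 maxsize: int = 2) -> Tuple[List[Any], int]:
    """Feed frames to a worker thread; return the results and the drop count."""
    frame_queue = LatestFrameQueue(maxsize)
    results: List[Any] = []

    def worker() -> None:
        while True:
            item = frame_queue.get()
            if item is _STOP:
                break
            results.append(process(item))

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    for frame in frames:
        frame_queue.put(frame)
    frame_queue.put(_STOP)  # may evict one pending frame, which counts as dropped
    thread.join()
    return results, frame_queue.dropped
```

Dropping stale frames keeps the delay between capture and result bounded by the queue depth, at the cost of skipping frames when the worker falls behind. Applications that must process every frame would block the producer instead.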

Resource Management and Power Optimization Strategies

Managing resources effectively is paramount for deploying video processing algorithms at the edge. Edge devices often have limited processing power, memory, and energy. Algorithms must therefore be designed to minimize compute, memory, and power consumption so that the device can operate for sustained periods. This is particularly important for battery-powered devices.

Power-saving techniques such as dynamic voltage and frequency scaling (DVFS) can significantly extend the battery life of edge devices. Prioritizing energy-efficient algorithms and architectures, coupled with proactive resource management strategies, is essential for successful and sustainable edge deployment of video processing applications.
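The reasoning behind voltage and frequency scaling can be sketched with the usual first-order model of dynamic power, P ≈ C·V²·f. For a fixed number of cycles per frame, the processing time scales with 1/f, so the dynamic energy per frame scales with V². The example below picks the lowest-energy operating point that still meets a frame deadline. The `OperatingPoint` type, the function names, and the voltage/frequency pairs are hypothetical, and the model deliberately ignores static (leakage) power and the cost of switching between points.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class OperatingPoint:
    """Hypothetical voltage/frequency pair exposed by a device."""
    freq_mhz: float
    voltage_v: float


def frame_time_ms(cycles_per_frame: float, point: OperatingPoint) -> float:
    """Processing time for one frame at the given clock frequency."""
    return cycles_per_frame / (point.freq_mhz * 1e6) * 1e3


def relative_energy_per_frame(point: OperatingPoint) -> float:
    """Dynamic energy per frame up to a constant factor: P ~ C*V^2*f and t ~ cycles/f."""
    return point.voltage_v ** 2


def pick_operating_point(points: Sequence[OperatingPoint], cycles_per_frame: float,
                         deadline_ms: float) -> Optional[OperatingPoint]:
    """Lowest-energy point that meets the deadline, or None if no point does."""
    feasible = [p for p in points
                if frame_time_ms(cycles_per_frame, p) <= deadline_ms]
    if not feasible:
        return None
    return min(feasible, key=relative_energy_per_frame)


if __name__ == "__main__":
    # Illustrative values only; real tables come from the device vendor.
    table = [OperatingPoint(600, 0.8), OperatingPoint(1000, 0.9),
             OperatingPoint(1500, 1.1)]
    print(pick_operating_point(table, cycles_per_frame=25e6, deadline_ms=33.3))
```

With these illustrative numbers the 600 MHz point misses a 33.3 ms deadline, so the 1000 MHz point is selected. On devices where leakage dominates, finishing quickly at a high frequency and then idling can be more efficient, so the model should be validated against measured power.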