Ensuring Transparency and Explainability in AI Assessment Tools

Defining Transparency in AI Assessment Tools

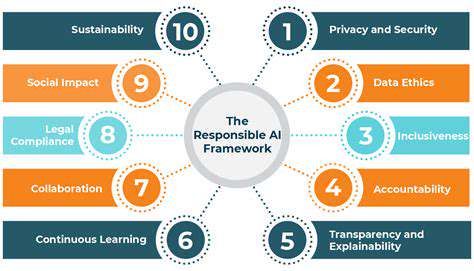

Modern assessment tools must prioritize comprehensibility alongside predictive accuracy. True transparency goes beyond reporting results: it covers the full decision chain, from the characteristics of the input data to the mechanisms that weight each factor. This end-to-end visibility is what allows reviewers to judge whether a system is fair and appropriate for its purpose.

Credit scoring offers a compelling use case. When applicants learn which specific factors lowered their rating, such as high credit utilization or a short credit history, they can take concrete steps to improve it instead of facing an unexplained rejection.

Understanding Explainable AI (XAI)

Explainability techniques translate algorithmic outputs into rationales that people can interpret. Common forms include feature importance scores, decision trees, and natural-language explanations. Effective XAI matches the level of detail to its audience: an auditor examining the model needs more technical precision than an applicant reading why a request was declined.
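For a linear scoring model, one simple form of feature importance is each feature's contribution to the score relative to a reference applicant; ranking the most negative contributions yields "reason codes" an applicant can act on. The sketch below assumes a hypothetical three-feature credit model: the feature names, weights, and baseline values are invented for illustration, and a nonlinear model would need a different attribution method.

```python
# Hypothetical linear credit-scoring model; weights and baseline are invented for illustration.
WEIGHTS = {
    "credit_utilization": -120.0,  # share of available credit in use (0 to 1)
    "years_of_history": 8.0,       # length of credit history in years
    "late_payments_12m": -35.0,    # late payments in the past 12 months
}
BASELINE = {  # reference applicant against which contributions are measured
    "credit_utilization": 0.30,
    "years_of_history": 10.0,
    "late_payments_12m": 0.0,
}

def contributions(applicant: dict[str, float]) -> dict[str, float]:
    """Per-feature effect on the score relative to the baseline: w_i * (x_i - b_i)."""
    return {name: w * (applicant[name] - BASELINE[name]) for name, w in WEIGHTS.items()}

def reason_codes(applicant: dict[str, float], top_n: int = 2) -> list[str]:
    """Names of the features that lowered the score the most, largest penalty first."""
    negative = sorted((c, name) for name, c in contributions(applicant).items() if c < 0)
    return [name for _, name in negative[:top_n]]

applicant = {"credit_utilization": 0.9, "years_of_history": 2.0, "late_payments_12m": 1.0}
print(reason_codes(applicant))  # ['credit_utilization', 'years_of_history']
```

Measuring against a baseline, rather than reporting raw weights, tells this particular applicant what separated them from a typical approved profile.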

Data Provenance and Bias Mitigation

Documenting data lineage provides crucial context for evaluating potential biases. Detailed metadata should accompany every training set, specifying collection methods, demographic distributions, and preprocessing transformations. This provenance record lets teams trace a disparity back to its source, such as an under-sampled group or a preprocessing step that discarded records unevenly, and correct it there.
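One way to keep this metadata attached to a dataset is a small structured record. The sketch below is a hypothetical schema, not a standard: the class name, field names, and the `min_share` threshold are assumptions chosen for illustration. A fuller version would recompute the group distribution after each logged transformation.

```python
from dataclasses import dataclass, field

@dataclass
class DatasetProvenance:
    """Hypothetical provenance record that travels with a training set."""
    name: str
    collection_method: str          # e.g. survey, web form, third-party purchase
    source_period: str              # when and where the data was gathered
    group_counts: dict[str, int]    # demographic distribution at collection time
    transformations: list[str] = field(default_factory=list)  # ordered preprocessing log

    def log_transformation(self, step: str) -> None:
        """Append a preprocessing step so the lineage stays reproducible."""
        self.transformations.append(step)

    def group_shares(self) -> dict[str, float]:
        """Return each group's share of the dataset."""
        total = sum(self.group_counts.values())
        return {group: count / total for group, count in self.group_counts.items()}

    def underrepresented(self, min_share: float) -> list[str]:
        """Groups whose share falls below min_share: candidates for targeted correction."""
        return sorted(g for g, s in self.group_shares().items() if s < min_share)


prov = DatasetProvenance(
    name="loan_applications_v1",
    collection_method="online application form",
    source_period="2019-2023, single region",
    group_counts={"A": 800, "B": 150, "C": 50},
)
prov.log_transformation("dropped rows with missing income")
print(prov.underrepresented(min_share=0.10))  # ['C']
```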

Continuous monitoring should track how algorithm performance and outcomes vary across user subgroups. Statistical parity tests compare the rate of favorable outcomes between groups and flag populations that experience systematically different outcomes, so the disparity can be investigated and, where warranted, corrected.
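A minimal statistical parity check compares each group's favorable-outcome rate with a reference group, both as a difference and as a ratio. The 0.8 default for `min_ratio` mirrors the "four-fifths" rule of thumb used in US employment-selection guidance; treat it as an assumption to tune, not a universal threshold. This sketch does no significance testing, so with small groups a flag is a prompt to investigate rather than proof of bias.

```python
from collections import defaultdict

def positive_rates(outcomes, groups):
    """Share of favorable outcomes (encoded as 1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}

def parity_report(outcomes, groups, reference):
    """Compare every group's favorable-outcome rate with a reference group.

    difference: rate(g) - rate(reference)   (statistical parity difference)
    ratio:      rate(g) / rate(reference)   (disparate impact ratio)
    """
    rates = positive_rates(outcomes, groups)
    ref = rates[reference]
    return {
        g: {"rate": r, "difference": r - ref, "ratio": r / ref if ref > 0 else float("nan")}
        for g, r in rates.items()
    }

def flag_groups(report, min_ratio=0.8):
    """Groups whose ratio falls below min_ratio."""
    return sorted(g for g, m in report.items() if m["ratio"] < min_ratio)

outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = parity_report(outcomes, groups, reference="A")
print(flag_groups(report))  # ['B']
```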

Establishing Ethical Guidelines for Data Collection

Responsible data practices begin with clear governance policies covering consent protocols, privacy safeguards, and the purposes for which data may be used. These policies must weigh the need for innovation against the protection of fundamental rights, particularly for vulnerable populations.
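Purpose limitation can be enforced in code by filtering records against consent before they reach a training pipeline. The sketch below assumes a hypothetical `ConsentRecord` structure and purpose labels such as `"model_training"`; real consent management also has to handle withdrawal, audit trails, and legal bases other than consent. The filter is default-deny: a record with no matching consent entry is excluded.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical consent entry: which uses a data subject agreed to, and until when."""
    subject_id: str
    purposes: frozenset[str]            # e.g. {"service", "model_training"}
    expires: Optional[date] = None      # None means no expiry was recorded

def usable_records(records, consents, purpose, today):
    """Return only records whose subject holds current consent for `purpose`."""
    allowed = {
        c.subject_id
        for c in consents
        if purpose in c.purposes and (c.expires is None or c.expires >= today)
    }
    return [r for r in records if r["subject_id"] in allowed]

consents = [
    ConsentRecord("u1", frozenset({"service", "model_training"})),
    ConsentRecord("u2", frozenset({"service"})),
    ConsentRecord("u3", frozenset({"model_training"}), expires=date(2020, 1, 1)),
]
records = [{"subject_id": s, "income": 50_000} for s in ("u1", "u2", "u3", "u4")]
training_set = usable_records(records, consents, "model_training", today=date(2024, 6, 1))
print([r["subject_id"] for r in training_set])  # ['u1']
```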

Accountability and Responsibility in AI Assessment

Clear ownership structures must define who bears responsibility for system outcomes. This includes establishing appeal processes and remediation procedures for affected parties. Liability frameworks should distinguish between developer, deployer and operator responsibilities.

Auditing and Monitoring AI System Performance

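Independent audits provide essential oversight for critical systems. These evaluations should assess both technical performance and societal impact through methods such as adversarial testing (probing the system with inputs designed to make it fail) and counterfactual analysis (checking whether a decision changes when a single attribute is altered). Audit frequency should scale with system risk: the higher the stakes, the more often the system is reviewed.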
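A basic counterfactual test swaps one attribute while holding everything else fixed and counts how often the decision changes. The sketch assumes the model is any callable that takes a dict and returns a decision; `fair_model` and `biased_model` are hypothetical stand-ins. Note the limitation: changing the attribute alone does not catch bias that flows through correlated proxy features, so a flip rate of zero is necessary but not sufficient evidence of fairness.

```python
def counterfactual_flip_rate(model, applicants, attribute, values):
    """Fraction of applicants whose decision changes when only `attribute` is altered."""
    flipped = 0
    for applicant in applicants:
        original = model(applicant)
        for value in values:
            if value == applicant[attribute]:
                continue
            variant = {**applicant, attribute: value}  # copy with one field changed
            if model(variant) != original:
                flipped += 1
                break  # count each applicant at most once
    return flipped / len(applicants)

# Two hypothetical models for illustration.
def fair_model(a):
    return a["income"] >= 50_000

def biased_model(a):
    # Applies a stricter income bar to one gender: the defect this test should expose.
    return a["income"] >= (70_000 if a["gender"] == "F" else 50_000)

applicants = [
    {"income": 60_000, "gender": "M"},
    {"income": 80_000, "gender": "F"},
    {"income": 40_000, "gender": "M"},
]
print(counterfactual_flip_rate(fair_model, applicants, "gender", ["F", "M"]))  # 0.0
print(round(counterfactual_flip_rate(biased_model, applicants, "gender", ["F", "M"]), 2))  # 0.33
```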

Ensuring Human Oversight in AI Decisions

High-consequence decisions should always include a point at which a human validates the outcome. This oversight takes various forms, from approval workflows to exception-handling procedures. Medical diagnostics illustrates the principle well: AI recommendations undergo clinician review before they affect patient care.
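A validation point can be made structural by refusing to let a routed case take effect until a reviewer decides. The sketch below is hypothetical: the `Recommendation` fields, the 0.95 confidence threshold, and the routing labels are illustrative assumptions, and relying on a confidence score presumes the model is reasonably calibrated.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Recommendation:
    """Hypothetical AI output awaiting a decision on whether it may take effect."""
    case_id: str
    label: str
    confidence: float        # model score in [0, 1]; assumed reasonably calibrated
    high_consequence: bool   # set by policy, e.g. anything affecting patient care

def route(rec: Recommendation, min_confidence: float = 0.95) -> str:
    """Send high-consequence or low-confidence cases to a human; let the rest proceed."""
    if rec.high_consequence or rec.confidence < min_confidence:
        return "human_review"
    return "auto"

def finalize(rec: Recommendation, reviewer_decision: Optional[str] = None) -> str:
    """Return the decision that takes effect.

    A case routed to human review cannot take effect until a reviewer decides,
    and the reviewer's decision overrides the model's label.
    """
    if route(rec) == "human_review":
        if reviewer_decision is None:
            raise ValueError(f"case {rec.case_id} is awaiting human review")
        return reviewer_decision
    return rec.label

scan = Recommendation("c1", "malignant", confidence=0.99, high_consequence=True)
print(route(scan))                                 # human_review
print(finalize(scan, reviewer_decision="benign"))  # benign
```

Raising an error, rather than silently falling back to the model's label, makes a missing review visible instead of letting it pass unnoticed.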

Promoting Collaboration and Continuous Improvement in Ethical AI Development

Enhancing Communication Channels

Cross-functional collaboration requires intentional communication infrastructure. Dedicated platforms supporting both asynchronous and real-time exchanges help align distributed teams toward common objectives. Healthcare AI projects show why this matters: clinicians, data scientists, and ethicists must stay in constant dialogue throughout development.

Structured knowledge-sharing sessions complement digital tools by creating space for deep discussion. These forums allow participants to surface assumptions, challenge perspectives and develop shared mental models.

Facilitating Knowledge Sharing

Systematic documentation practices transform individual expertise into organizational assets. Centralized repositories containing implementation lessons, failure analyses, and success patterns accelerate collective learning. The cybersecurity field offers proven models for this kind of knowledge management, including post-incident reviews and shared vulnerability databases.

Institutionalizing knowledge transfer prevents critical information from becoming siloed while reducing redundant effort. Mentorship programs further reinforce this by connecting experienced practitioners with newer team members.

Building Trust and Respect

Psychological safety forms the foundation of effective collaboration. Teams perform best when members feel comfortable expressing concerns and proposing unconventional ideas. Leadership plays a crucial role in modeling constructive feedback and conflict resolution.

Recognition systems that value both individual excellence and team contributions help maintain engagement. Celebrating collaborative successes reinforces desired behaviors and strengthens group cohesion.

Establishing Clear Roles and Responsibilities

Precise role definitions prevent overlap while ensuring that every responsibility is covered. A RACI matrix, which records for each task who is Responsible (does the work), Accountable (owns the outcome), Consulted, and Informed, brings this clarity to cross-functional initiatives. The aviation industry's crew resource management offers proven examples of effective role structuring.
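A RACI matrix can be checked mechanically for gaps and overlaps. This sketch assumes a common RACI convention, exactly one Accountable party and at least one Responsible party per task, and a hypothetical encoding in which each person's entry is a string of role letters (so "AR" marks someone who is both). Teams that follow different conventions would adjust the checks.

```python
def validate_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Check a RACI matrix and return a list of problems (empty means it passed).

    matrix maps each task to {person: role codes}; a person may hold more than
    one code, e.g. "AR" for someone who is both Accountable and Responsible.
    """
    problems = []
    for task, assignments in matrix.items():
        codes = list(assignments.values())
        unknown = sorted(set("".join(codes)) - set("RACI"))
        if unknown:
            problems.append(f"{task}: unknown role code(s) {unknown}")
        accountable = sum("A" in c for c in codes)
        if accountable != 1:
            problems.append(f"{task}: expected exactly one Accountable, found {accountable}")
        if not any("R" in c for c in codes):
            problems.append(f"{task}: no one is Responsible")
    return problems

# Hypothetical team and tasks for illustration.
matrix = {
    "bias audit": {"ml_lead": "A", "data_scientist": "R", "ethicist": "C", "legal": "I"},
    "model deployment": {"ml_lead": "A", "platform_eng": "A", "sre": "I"},
}
for problem in validate_raci(matrix):
    print(problem)
# model deployment: expected exactly one Accountable, found 2
# model deployment: no one is Responsible
```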

Well-articulated expectations enable team members to focus their efforts where they add the greatest value. Regular role reviews ensure alignment with evolving project needs and individual growth.