Bias and Fairness in AI-Powered Security Systems

Defining Bias in AI Systems

AI systems trained on large datasets can inadvertently learn and perpetuate the biases those datasets contain. These biases take many forms, from gender and racial stereotypes to socioeconomic disparities, and they can lead to discriminatory outcomes that harm individuals and groups. Recognizing and understanding them is the first step toward mitigating their impact and building responsible AI applications.

Data Collection and Representation

The quality and representativeness of training data strongly shape a model's output. Datasets that reflect societal inequalities can lead to discriminatory outcomes. For example, a facial recognition system trained primarily on images of light-skinned individuals may be markedly less accurate on images of darker-skinned individuals.
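A first check is to measure how each group is represented in the training data compared with the population the system will actually serve. The minimal sketch below assumes that every training example carries a group label and that you can supply reference shares for the deployment population. Both are assumptions, since many real datasets record neither, and the function name and labels are hypothetical.

```python
from collections import Counter

def representation_gaps(group_labels, reference_shares):
    """Return {group: share in training data - reference share}.

    group_labels: one group label per training example (assumed available).
    reference_shares: expected share of each group in the deployment
    population (an assumption the caller must supply).
    """
    if not group_labels:
        raise ValueError("group_labels must not be empty")
    counts = Counter(group_labels)
    total = len(group_labels)
    # Counter returns 0 for groups that never appear in the training data.
    return {
        group: counts[group] / total - expected
        for group, expected in reference_shares.items()
    }

# Hypothetical skin-tone labels for a face dataset: 80% lighter, 20% darker.
labels = ["lighter"] * 80 + ["darker"] * 20
gaps = representation_gaps(labels, {"lighter": 0.5, "darker": 0.5})
```

A large negative gap, such as a group that makes up 20% of the training set but half of the expected users, signals that more data is needed for that group. Representation alone does not guarantee equal accuracy, so per-group performance still has to be measured separately.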

Algorithmic Design and Implementation

The algorithms themselves can also introduce or amplify bias. An algorithm that appears neutral can still magnify existing inequalities if it is not designed carefully. For instance, a model that predicts recidivism risk from historical data that disproportionately reflects bias against certain racial groups can learn to rely on correlated proxy features, such as neighborhood, and produce systematically unfair risk scores even when race is never an explicit input.

Evaluation and Monitoring

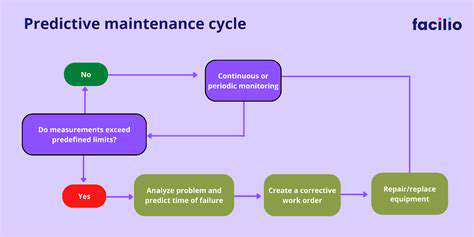

Regular evaluation and monitoring are essential to detect and address bias. This involves analyzing the system's outputs across groups to identify patterns of discrimination and to surface unintended consequences embedded in the algorithm, so that they can be mitigated before they cause harm. Effective monitoring is what makes fairness and accountability verifiable rather than assumed.
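One simple output-based check compares how often each group receives a favorable decision. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. Treating a ratio below 0.8 as a warning sign follows the US "four-fifths rule" from employment-selection guidance and is used here only as an illustrative rule of thumb, not proof of discrimination. Function names and data are hypothetical.

```python
def selection_rates(decisions, groups):
    """Share of favorable decisions (1) per group."""
    totals, favorable = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + (1 if decision else 0)
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision, so rates are equal
    return min(rates.values()) / highest

# Hypothetical loan decisions for two groups of four applicants each.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
ratio = disparate_impact_ratio(decisions, groups)
needs_review = ratio < 0.8
```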

Impact on Different Groups

The consequences of AI bias can vary significantly across demographic groups. Some groups may bear disproportionate harm from algorithmic discrimination in areas such as loan applications, hiring, and criminal justice. Analyzing how bias affects specific groups helps reveal its broader societal implications.
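Equal overall accuracy can hide very different kinds of mistakes for different groups. A minimal sketch, assuming binary labels where 1 marks the adverse class (for example "high risk" or "malicious"), that reports false positive and false negative rates per group; the function name is hypothetical.

```python
def error_rates_by_group(y_true, y_pred, groups):
    """Per-group false positive rate (fpr) and false negative rate (fnr)."""
    stats = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats.setdefault(group, {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
        if truth == 1:
            s["pos"] += 1
            if pred == 0:
                s["fn"] += 1  # a real positive the model missed
        else:
            s["neg"] += 1
            if pred == 1:
                s["fp"] += 1  # a harmless case the model flagged
    # NaN marks a rate that cannot be computed because the group has no such cases.
    return {
        g: {
            "fpr": s["fp"] / s["neg"] if s["neg"] else float("nan"),
            "fnr": s["fn"] / s["pos"] if s["pos"] else float("nan"),
        }
        for g, s in stats.items()
    }
```

A large gap in false positive rates means one group is wrongly flagged more often than another. Error-rate parity is only one fairness criterion, and when base rates differ between groups it can conflict with others, such as equally calibrated risk scores.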

Mitigation Strategies

Several strategies can mitigate bias in AI systems: training on diverse and representative datasets, designing algorithms with fairness in mind, and evaluating systems on an ongoing basis. Addressing bias proactively is key to ensuring equitable outcomes.

Using diverse and representative datasets in training is one important strategy to mitigate bias. This approach aims to ensure the AI system learns from a wide range of experiences and perspectives, thereby reducing the likelihood of perpetuating existing inequalities.
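When gathering more representative data is not feasible, one related preprocessing technique is reweighing, described by Kamiran and Calders: each training example is weighted so that group membership and label become statistically independent in the weighted data. This is a substitute technique rather than one the text above prescribes, and the sketch below is a minimal version with a hypothetical function name.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    After weighting, every group has the same weighted label rate as the
    dataset as a whole (for group/label combinations that actually occur).
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Hypothetical data: group "a" is labeled positive far more often than group "b".
groups = ["a"] * 4 + ["b"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
```

The weights can be passed to any training routine that accepts per-sample weights; many scikit-learn estimators, for example, take a `sample_weight` argument in `fit`.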

Ethical Considerations and Responsible AI

Developing and deploying AI systems responsibly requires careful consideration of ethical implications. Fairness and transparency are paramount in ensuring that AI systems benefit all members of society. This includes establishing clear guidelines and frameworks for developing and deploying AI systems in an ethical manner.

Transparency in AI algorithms is essential for understanding how decisions are made, allowing for scrutiny and accountability. This is a crucial step in building trust and ensuring fairness in AI systems.
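For simple models, transparency can be exact. The sketch below assumes a hypothetical logistic login-risk model with hand-named features. Because its logit is a bias plus a weighted sum of features, each weight-times-value term is an exact account of how that feature moved the decision. Complex models such as deep networks do not decompose this way and need post-hoc explanation methods, which are approximations.

```python
import math

def explain_linear_score(weights, bias, features):
    """Return (probability, contributions ranked by absolute size).

    The logit is bias + sum(weights[name] * value), so each contribution
    is exactly how much that feature moved the logit.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    logit = bias + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked

# Hypothetical model weights and one login event.
weights = {"failed_logins": 0.8, "new_device": 1.5, "off_hours": 0.4}
prob, reasons = explain_linear_score(
    weights, -4.0, {"failed_logins": 3, "new_device": 1, "off_hours": 0}
)
```

Reporting the top-ranked contributions alongside each decision gives analysts and auditors something concrete to scrutinize.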

The Future of Ethical AI in Cybersecurity

Ethical Considerations in AI-Powered Cybersecurity

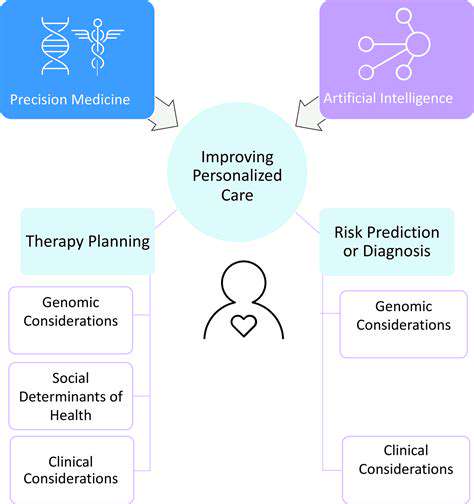

The integration of artificial intelligence (AI) into cybersecurity is rapidly transforming the landscape, offering unprecedented potential for threat detection and response. However, the deployment of AI systems in this domain raises critical ethical considerations. AI algorithms, trained on vast datasets, may inadvertently perpetuate existing biases or reflect societal prejudices, leading to discriminatory outcomes in threat analysis and response. Carefully curated and diverse datasets are crucial to mitigate this risk, ensuring fairness and equity in the application of AI-powered cybersecurity tools.

Furthermore, the increasing reliance on AI-driven systems necessitates a robust framework for accountability and transparency. Who is responsible when an AI system makes a critical error, potentially leading to a security breach? Establishing clear lines of responsibility and implementing transparent decision-making processes are paramount to ensuring user trust and confidence in these powerful technologies. This includes providing mechanisms for human oversight and intervention when necessary to prevent unintended consequences.
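One common way to build in human oversight is to let the system act on its own only when it is highly confident and to route everything else to an analyst. The thresholds, outcome labels, and `Alert` type below are illustrative assumptions, not values from any particular product.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    alert_id: str
    threat_score: float  # model's estimated probability that the activity is malicious

def route_alert(alert: Alert, auto_block_at: float = 0.95, log_only_below: float = 0.05) -> str:
    """Return 'auto_block', 'log_only', or 'human_review' for an alert.

    Only very confident scores are handled automatically; the uncertain
    middle band is escalated to a human analyst.
    """
    if not 0.0 <= alert.threat_score <= 1.0:
        raise ValueError("threat_score must be in [0, 1]")
    if alert.threat_score >= auto_block_at:
        return "auto_block"
    if alert.threat_score < log_only_below:
        return "log_only"
    return "human_review"
```

In practice the thresholds would be tuned on validation data, and each routed decision would be logged so that responsibility for it can be traced.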

Ensuring Responsible AI Development in Cybersecurity

A crucial aspect of ethical AI development in cybersecurity is responsible data usage. AI models depend heavily on training data, and its collection, storage, and use must follow strict ethical guidelines. This includes having a lawful basis, such as informed consent, for processing individuals' data, protecting data privacy, and complying with relevant regulations such as the EU General Data Protection Regulation (GDPR).
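One privacy measure that fits model training is pseudonymization: replacing direct identifiers such as usernames or IP addresses with keyed tokens before the data reaches the training pipeline. A minimal sketch using Python's standard `hmac` and `hashlib` modules; the record fields are hypothetical, and real key management is out of scope.

```python
import hashlib
import hmac
import secrets

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token.

    The same identifier always yields the same token under the same key, so
    events can still be correlated, but without the key the token cannot
    feasibly be linked back to the original value.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical log record. In practice the key would come from a key
# management system and be stored separately from the pseudonymized data.
key = secrets.token_bytes(32)
record = {"user": "alice@example.com", "src_ip": "203.0.113.7", "bytes_sent": 5120}
training_record = {
    field: pseudonymize(value, key) if field in ("user", "src_ip") else value
    for field, value in record.items()
}
```

Under GDPR, pseudonymized data is still personal data, so this reduces risk but does not remove the need for a lawful basis or other safeguards.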

Another key element is fostering collaboration and knowledge sharing among stakeholders. Security researchers, policymakers, and industry experts must work together to develop shared ethical guidelines and best practices for AI in cybersecurity. Open dialogue and collaboration are essential to ensure that AI advancements in this field align with societal values and promote responsible innovation.

In addition, AI systems need continuous monitoring and evaluation to detect potential biases or errors. Regular audits and assessments can identify and correct unintended consequences before they escalate into serious security breaches. Iterative refinement, driven by feedback loops that incorporate real-world data and user experience, keeps models effective and limits risk as conditions change.
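One concrete monitoring signal is drift in the model's score distribution. The sketch below computes the population stability index (PSI) between a baseline sample of scores and a recent one, assuming scores in [0, 1] and equal-width bins; the bin count and the small constant that guards against empty bins are illustrative choices.

```python
import math

def population_stability_index(baseline, recent, bins=10, eps=1e-6):
    """PSI = sum over bins of (a - e) * ln(a / e), where e and a are the
    fractions of baseline and recent scores that fall in each bin."""
    def bin_fractions(scores):
        if not scores:
            raise ValueError("need at least one score")
        counts = [0] * bins
        for s in scores:
            if not 0.0 <= s <= 1.0:
                raise ValueError("scores must lie in [0, 1]")
            counts[min(int(s * bins), bins - 1)] += 1  # s == 1.0 goes in the last bin
        # Floor each fraction at eps so empty bins do not produce log(0).
        return [max(c / len(scores), eps) for c in counts]

    e = bin_fractions(baseline)
    a = bin_fractions(recent)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

A common rule of thumb from credit-risk practice reads PSI below 0.1 as stable and above 0.25 as a significant shift worth an audit; these cutoffs are conventions, not guarantees. Drift shows that inputs have changed, not that the model has become biased, so a flagged shift should trigger a per-group re-evaluation rather than an automatic conclusion.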

The development and deployment of AI in cybersecurity must be guided by clear ethical principles to ensure responsible innovation. This includes prioritizing human well-being, protecting privacy, and promoting fairness and transparency. Only through a collaborative and thoughtful approach can we harness the transformative potential of AI while mitigating its inherent risks.