Protecting Student Data Privacy in AI-Powered Feedback Systems

Ensuring Data Minimization

AI-powered feedback systems can collect large amounts of student data, which creates a risk of over-collection. Strict data minimization addresses that risk: only the data needed for the feedback system's intended function should be collected and stored. In practice, this means defining the specific data points required for analysis and feedback, and dropping everything else, including redundant information. Clear guidelines and protocols should also ensure that data is not retained beyond its necessary lifespan.
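One way to enforce minimization at the point of collection is an allowlist filter. The following is a minimal sketch, not a definitive implementation: the field names and the `minimize` helper are hypothetical and stand in for whatever data points a given system actually requires.

```python
# Hypothetical allowlist: the only fields this sketch assumes the
# feedback system needs. Real systems would define their own.
ALLOWED_FIELDS = {"submission_text", "assignment_id", "rubric_version"}


def minimize(record: dict) -> dict:
    """Return a copy of the record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


# Illustrative input containing fields the feedback function does not need.
raw = {
    "submission_text": "My essay on photosynthesis.",
    "assignment_id": "bio-101-hw3",
    "rubric_version": 2,
    "home_address": "12 Example Street",
    "date_of_birth": "2010-04-01",
}

minimal = minimize(raw)
```

An allowlist is used here rather than a blocklist so that any new field added upstream is dropped by default until someone justifies collecting it.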

Explicit consent from students and their parents/guardians for the collection, use, and storage of data is essential. The specific data collected, its purpose, and how it will be used must be communicated clearly before collection begins. This openness strengthens trust and demonstrates a commitment to ethical data handling within the learning environment.

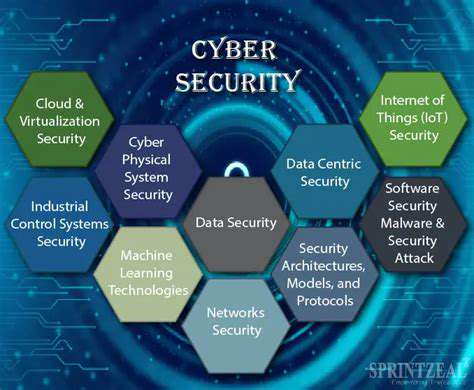

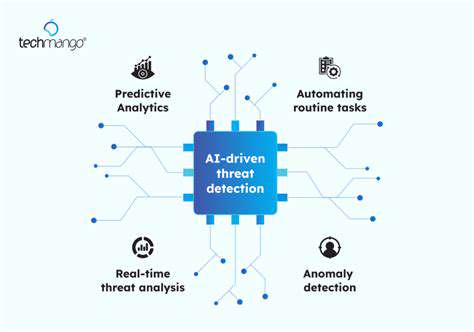

Implementing Robust Security Measures

Data security is paramount in protecting student privacy. Robust security measures, including encryption of data in transit and at rest and role-based access controls, must be implemented to prevent unauthorized access, use, disclosure, disruption, modification, or destruction of student data. Regular security audits and penetration testing should be conducted to identify and address vulnerabilities in the system.

Furthermore, clear procedures for handling data breaches should be established and communicated in advance so that a security incident can be met with a swift and effective response. A prepared response limits the impact of a breach on students and their data.
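The access-control part of this can be illustrated with a small role-based permission check. This is a sketch under stated assumptions: the roles, the action names, and the `is_allowed` helper are hypothetical, and a production system would rely on its platform's authorization framework rather than a hand-rolled table.

```python
# Hypothetical role-to-permission table; role and action names are
# illustrative only.
ROLE_PERMISSIONS = {
    "student": {"read_own_feedback"},
    "teacher": {"read_feedback", "read_submission"},
    "admin": {"read_feedback", "read_submission", "delete_record"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

For example, `is_allowed("teacher", "delete_record")` is rejected because the action is not listed for that role, and any role missing from the table is rejected for every action.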

Promoting Data Anonymization and Pseudonymization

Wherever possible, student data should be anonymized or pseudonymized before it is analyzed. Anonymization removes all identifying characteristics, while pseudonymization replaces identifying information with unique identifiers. Both techniques protect student privacy by making it difficult to connect data back to specific individuals.

The two techniques reduce the risk of re-identification to different degrees. Pseudonymized data can still be linked back to a student by anyone who holds the mapping or key that connects identifiers to identities, so that mapping or key must be stored separately and protected. Applied carefully, these techniques preserve student privacy while still allowing AI-powered feedback systems to operate effectively.
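The pseudonymization described above can be sketched with a keyed hash. This example uses HMAC-SHA256 from the Python standard library. The `pseudonymize` function, the sample ID, and the placeholder key are assumptions made for illustration. A real deployment would load the key from a secrets store.

```python
import hashlib
import hmac


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Derive a stable pseudonym from a student ID using HMAC-SHA256."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Placeholder key for illustration only; never hard-code a real key.
key = b"example-key-loaded-from-a-secrets-store"
token = pseudonymize("student-00123", key)
```

A keyed hash is chosen over a plain hash because student IDs are usually short and guessable, so an unkeyed hash could be reversed by hashing every candidate ID. The same ID and key always yield the same pseudonym, which keeps records linkable for analysis. It also means anyone holding the key can recompute pseudonyms, so this is pseudonymization, not anonymization.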

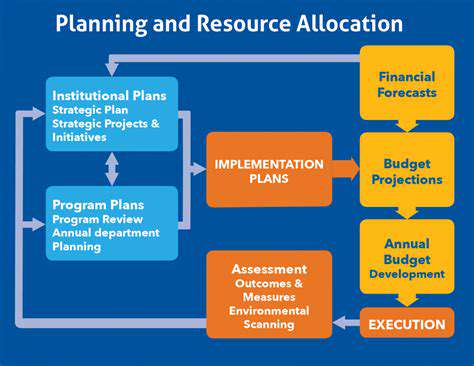

Establishing Clear Data Retention Policies

Data retention policies should be clearly defined and communicated to all stakeholders. These policies should specify the duration for which student data will be retained, the conditions under which data may be deleted or archived, and the procedures for accessing and retrieving data.

Establishing these policies ensures that student data is not stored indefinitely. Shorter retention also limits how much data is exposed if a breach does occur, which supports responsible and ethical handling overall. Clear guidelines for data disposal methods must also be in place to prevent misuse or re-identification of sensitive information after it is no longer needed.
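A retention rule of this kind can be expressed as a simple expiry check. The sketch below assumes a hypothetical 365-day retention period and a `collected_at` timestamp on each record. Neither comes from a specific regulation or system, and secure disposal of the purged data is out of scope here.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention period; the actual duration is a policy decision.
RETENTION = timedelta(days=365)


def is_expired(collected_at: datetime, now: datetime,
               retention: timedelta = RETENTION) -> bool:
    """Return True if the record is older than the retention period."""
    return now - collected_at > retention


def purge_expired(records: list, now: datetime) -> list:
    """Return only the records that are still within the retention period."""
    return [r for r in records if not is_expired(r["collected_at"], now)]


# Illustrative records with timezone-aware timestamps.
records = [
    {"id": "a", "collected_at": datetime(2023, 1, 1, tzinfo=timezone.utc)},
    {"id": "b", "collected_at": datetime(2024, 6, 1, tzinfo=timezone.utc)},
]
now = datetime(2024, 9, 1, tzinfo=timezone.utc)
kept = purge_expired(records, now)
```

Passing `now` explicitly, rather than reading the clock inside the function, makes the rule easy to test and to audit against the written policy.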

Incorporating Transparency and User Control

Students and their parents/guardians should be informed about how their data is being used in AI-powered feedback systems. This includes details about the specific algorithms used, the types of feedback generated, and the potential implications of the system's analysis.

Students and parents should also be able to access, correct, and delete their data. This gives them control over their personal information and keeps the system aligned with their privacy preferences.
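The access, correct, and delete operations can be sketched as a small interface. The `StudentDataStore` class below is hypothetical and keeps records in memory purely for illustration. A real system would also need authentication, audit logging, and deletion from backups.

```python
class StudentDataStore:
    """In-memory sketch of access, correction, and deletion requests."""

    def __init__(self):
        self._records = {}

    def save(self, student_id: str, data: dict) -> None:
        # Store a copy so later changes to the caller's dict are not shared.
        self._records[student_id] = dict(data)

    def export(self, student_id: str) -> dict:
        """Access: return a copy of everything held about the student."""
        return dict(self._records.get(student_id, {}))

    def correct(self, student_id: str, field: str, value) -> None:
        """Correction: update a single field on an existing record."""
        if student_id not in self._records:
            raise KeyError(student_id)
        self._records[student_id][field] = value

    def delete(self, student_id: str) -> bool:
        """Deletion: remove the record; return True if one existed."""
        return self._records.pop(student_id, None) is not None
```

Returning copies from `export` keeps callers from editing stored data outside the `correct` path, so every change goes through one place that could later be logged.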

Fostering Ethical Development and Evaluation

AI-powered feedback systems should be developed and evaluated with a strong emphasis on ethical considerations. Bias in data sets and algorithms must be actively addressed to ensure fairness and equity in the feedback provided to all students. Regular evaluations should be conducted to assess the system's impact on student privacy and to identify potential vulnerabilities.

Ethical guidelines should be incorporated into the system's design and implementation phases, and revisited as the system changes. This ongoing process should involve input from diverse stakeholders, including educators, students, and privacy experts. These efforts help reduce the potential negative impact of AI-powered feedback systems and promote a more equitable and ethical learning environment.

Promoting Collaboration and Education

Collaboration between educational institutions, technology developers, and privacy experts is essential for developing and implementing ethical guidelines for AI-powered feedback systems. This collaboration can help to identify best practices and share knowledge and resources related to student data privacy. Education programs that teach educators and students about data privacy and responsible data use are equally important.

Promoting awareness and understanding of privacy rights and responsibilities among all stakeholders is paramount for effective implementation of these systems. By fostering a culture of data privacy, we can build trust and ensure that AI-powered feedback systems are used in a responsible and ethical manner that benefits all students.