Deepfakes: A New Era of Deception

Deepfakes, synthetic media in which a person's likeness is superimposed onto other video or audio, pose a significant threat to individuals and society. The ease with which these forgeries can be created and disseminated is alarming: malicious actors can use them to spread misinformation and disinformation on an unprecedented scale. Although the underlying techniques were developed largely for research and creative applications, they have become a tool for manipulation that can undermine trust in established media and institutions.

The implications for privacy and reputation are profound. A single, convincingly crafted deepfake can damage a person's career, relationships, and public image irrevocably. This new form of manipulation can be used for extortion, blackmail, or even political sabotage, creating scenarios where the line between reality and fabrication blurs.

The Technology Behind Deepfakes

Deepfakes rely on deep neural networks trained on large collections of images, video, and audio of a target. From this source material the networks learn the characteristic patterns of the target's appearance and voice, which lets them generate new content that convincingly mimics both. Common architectures include autoencoders and generative adversarial networks (GANs). As these models grow more sophisticated, the resulting manipulations become more realistic and harder to detect.

The Impact on Trust and Credibility

The proliferation of deepfakes erodes public trust in traditional sources of information. If individuals cannot reliably distinguish between genuine and fabricated content, the very foundation of a democratic society is shaken. The ability to manipulate images and videos creates a new battleground for misinformation, where fabricated content can easily overwhelm genuine information.

This erosion of trust extends beyond individuals to institutions. News organizations, political figures, and even businesses are vulnerable to damage from deepfakes, potentially jeopardizing their reputations and credibility in the public eye.

Detection and Countermeasures

Developing effective methods for detecting deepfakes is a critical challenge. Researchers are exploring a range of forensic techniques for analyzing images, video, and audio. These tools can often identify subtle inconsistencies or artifacts that betray the media's synthetic origin, such as unnatural blinking, lighting that does not match the scene, or blending seams around the edges of a swapped face. Because generation methods keep improving, however, detectors must be continually retrained and adapted to stay ahead.
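The idea of searching for inconsistencies can be illustrated with a deliberately simple heuristic. The sketch below assumes that a hypothetical per-frame measurement (for instance, the mean brightness of the detected face region) has already been extracted, and it flags frames whose change from the previous frame is a statistical outlier. The function name `flag_inconsistent_frames` and the default threshold of 3 standard deviations are illustrative choices. Real detectors typically rely on trained classifiers, and this toy check is not one.

```python
from statistics import mean, pstdev


def flag_inconsistent_frames(features, z_threshold=3.0):
    """Flag frames whose change from the previous frame is an outlier.

    `features` holds one hypothetical scalar measurement per frame, such as
    the mean brightness of the detected face region. Returns frame indices.
    """
    if len(features) < 3:
        return []  # too few frames to estimate typical variation
    # diffs[i] is the absolute change from frame i to frame i + 1.
    diffs = [abs(b - a) for a, b in zip(features, features[1:])]
    mu = mean(diffs)
    sigma = pstdev(diffs)
    if sigma == 0:
        return []  # perfectly uniform changes: nothing stands out
    # A large z-score means the jump into frame i + 1 is unusually big.
    return [i + 1 for i, d in enumerate(diffs) if (d - mu) / sigma > z_threshold]


if __name__ == "__main__":
    # Synthetic signal: small flicker with one abrupt jump at frame 20.
    signal = [0.50, 0.51] * 15
    signal[20] = 0.90
    print(flag_inconsistent_frames(signal))  # [20, 21]
```

A single abrupt jump is flagged twice, once for the frame where the value changes and once for the frame where it returns to normal, so a practical tool would merge adjacent flags into one suspicious segment.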

Ethical Considerations and Legal Frameworks

The rapid advancement of deepfake technology necessitates a robust ethical framework. Serious questions arise about intellectual property rights, privacy, and the potential for misuse. Current legal frameworks are often ill-equipped to address the complexities of deepfake creation and distribution. This calls for proactive measures to address the ethical dilemmas and establish appropriate legal safeguards.

The Future of Deepfakes: A Call for Responsibility

The future of deepfakes hinges on a collective commitment to responsible innovation. Mitigating the risks requires a multi-faceted approach that combines education, technical safeguards, and legislative action. It is crucial to raise public awareness of the dangers of deepfakes and to give individuals the tools and knowledge to recognize and report these manipulations. The fight against deepfakes is a continuous challenge that demands vigilance and collaboration from all stakeholders.

The Role of Transparency and Accountability

Transparency in Generative AI Models

Transparency is crucial for evaluating the ethical implications of generative AI, because it reveals how these models arrive at their outputs. Without it, biases present in the training data can propagate unnoticed, perpetuating harmful stereotypes or misinformation. Opacity also makes errors in a model's output difficult to identify and correct, which compromises the reliability and trustworthiness of the generated content.

Examining the internal workings of generative AI models is challenging but essential for building public trust. It requires making the training datasets, the algorithms, and the methods used to evaluate model performance accessible to scrutiny. Open-source models and clear documentation are critical steps toward this transparency.

Accountability for Generative AI Outputs

Establishing clear lines of accountability is paramount when dealing with generative AI. Determining who is responsible for the content these models create is complex: is it the developer, the user, or the platform hosting the model? Clear guidelines and protocols for handling potentially harmful outputs are therefore critical.

This accountability extends beyond the initial creation of the model. Continuous monitoring and evaluation are necessary to identify and address emerging ethical concerns. Mechanisms for feedback and redress are also essential for addressing issues arising from the use of generative AI.

Bias Mitigation in Generative AI

Generative AI models are trained on vast datasets, which often reflect existing societal biases. These biases can be inadvertently amplified and perpetuated by the models, leading to discriminatory outputs. Addressing these biases requires careful consideration of the training data and the algorithms used to create the models.

Techniques for detecting and mitigating bias in generative AI models are constantly evolving. This includes analyzing the data for disparities, implementing algorithms that actively counter bias, and developing methods for evaluating the model's output for fairness and equity. Continuous monitoring and adaptation of these techniques are essential to ensure responsible AI development.
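Two widely used group-fairness measures show what evaluating a model's output for fairness can mean in practice. The sketch below assumes each output has already been labelled with a group and a binary favourable/unfavourable outcome; how that labelling is done is outside its scope. It computes per-group selection rates, the demographic parity difference (largest rate minus smallest), and the disparate impact ratio (smallest rate divided by largest). The function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Fraction of favourable outcomes per group.

    `outcomes` is an iterable of (group, favourable) pairs, where
    `favourable` is a bool assigned by some upstream labelling step.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, is_favourable in outcomes:
        totals[group] += 1
        favourable[group] += int(is_favourable)
    return {group: favourable[group] / totals[group] for group in totals}


def demographic_parity_difference(outcomes):
    """Largest minus smallest selection rate; 0.0 means equal rates."""
    rates = selection_rates(outcomes).values()
    return max(rates) - min(rates)


def disparate_impact_ratio(outcomes):
    """Smallest divided by largest selection rate; 1.0 means equal rates."""
    rates = selection_rates(outcomes).values()
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0
```

If group A receives a favourable outcome 8 times out of 10 and group B 4 times out of 10, the parity difference is 0.4 and the ratio is 0.5. A ratio below 0.8 is often treated as a warning sign, following the "four-fifths rule" from US employment-discrimination guidelines. It is a screening heuristic, not a definitive test of fairness.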

Impact on Copyright and Intellectual Property

The ability of generative AI to create novel content raises complex questions about copyright and intellectual property. If a model creates a piece of art or text, who owns the rights to that work? A related question is whether copyrighted works may be used as training data without permission. Clear legal frameworks are needed to navigate this emerging area of law and to ensure fair compensation for creators.

The Role of Human Oversight in AI

Human oversight and intervention are essential in the development and deployment of generative AI. While AI models can automate many tasks, human judgment and ethical considerations remain critical in contexts such as content moderation and safety. Human review of outputs is often necessary to ensure that AI-generated content aligns with ethical guidelines and societal values.

Human oversight is particularly critical in ensuring that generative AI models are not used to create harmful content, such as deepfakes or misinformation. The need for human judgment in interpreting and responding to AI-generated content cannot be overstated.
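One common way to combine automation with human judgment is threshold-based routing: an automated classifier scores each output, clearly benign outputs are released, clearly harmful ones are blocked, and the uncertain middle band goes to a human reviewer. The sketch below assumes a hypothetical harm score in [0, 1] produced by some upstream classifier. The names `route_output` and `ReviewDecision`, and the thresholds 0.5 and 0.9, are placeholders that would need tuning for any real system.

```python
from dataclasses import dataclass


@dataclass
class ReviewDecision:
    action: str   # "publish", "human_review", or "block"
    score: float  # the harm score that led to this decision


def route_output(harm_score, review_at=0.5, block_at=0.9):
    """Route one generated output using a hypothetical harm score in [0, 1]."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if not 0.0 <= review_at <= block_at <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= review_at <= block_at <= 1")
    if harm_score >= block_at:
        return ReviewDecision("block", harm_score)
    if harm_score >= review_at:
        # Uncertain band: a person makes the final call.
        return ReviewDecision("human_review", harm_score)
    return ReviewDecision("publish", harm_score)
```

Widening the band between the two thresholds sends more content to people, trading reviewer workload for fewer purely automated decisions.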

Ensuring Ethical Data Collection and Usage

The data used to train generative AI models must be collected and used ethically. This includes respecting privacy rights, obtaining informed consent, and ensuring data security. Transparency in data collection practices is essential to build trust and accountability in the AI development process.

Questions of data ownership, access, and usage must be addressed throughout the entire lifecycle of a generative AI project. Failing to address them could lead to serious ethical and legal repercussions.

The Future of Generative AI Ethics

The rapid advancement of generative AI necessitates ongoing dialogue and collaboration amongst researchers, policymakers, and the public to shape its ethical trajectory. Continuous evaluation and adaptation of ethical guidelines are essential to address unforeseen challenges and maintain public trust. The development of robust frameworks for responsible AI development is crucial for harnessing the potential of this technology while mitigating its risks.

International cooperation and standardization of ethical guidelines for generative AI will become more important as the technology is adopted across different societies and cultures. Such cooperation would help establish a common understanding and application of ethical principles worldwide.

Mitigating the Risks: A Multi-Faceted Approach

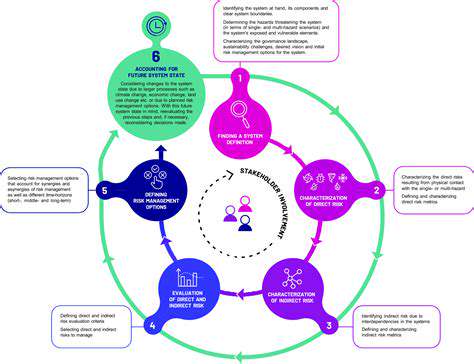

Understanding the Potential Threats

Identifying and understanding the potential risks associated with any project or endeavor is crucial for effective mitigation. This involves a thorough analysis of the factors that could hinder progress or lead to negative outcomes. A comprehensive risk assessment should consider both internal and external factors rather than focusing only on obvious dangers. For example, entering a new market might seem straightforward but could be hampered by unforeseen regulatory changes or shifts in consumer preferences. A proactive approach to risk assessment is essential.

Thorough research and investigation are critical to identifying potential problems. This includes analyzing industry trends, competitor activities, and potential financial impacts. Failure to adequately assess potential threats can lead to significant setbacks and financial losses. Proactive identification of potential issues allows for strategic planning and resource allocation to mitigate those risks.
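A common way to turn this analysis into priorities is a likelihood-times-impact risk matrix. The sketch below rates each risk on assumed 1 to 5 scales, multiplies the two ratings into a score, and sorts risks from most to least severe. The `Risk` class, the scales, and the band boundaries for "high", "medium", and "low" are illustrative assumptions rather than a standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self):
        for label, value in (("likelihood", self.likelihood), ("impact", self.impact)):
            if not 1 <= value <= 5:
                raise ValueError(f"{label} must be between 1 and 5, got {value}")

    @property
    def score(self):
        return self.likelihood * self.impact

    @property
    def level(self):
        # Illustrative band boundaries, not an industry standard.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(risks):
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("unforeseen regulatory change", 3, 4),
        Risk("shift in consumer preferences", 4, 4),
        Risk("minor supplier delay", 2, 2),
    ]
    for risk in prioritize(register):
        print(f"{risk.name}: score {risk.score} ({risk.level})")
```

The ratings in the example are invented for illustration; in practice they come from the research and investigation described above, and the resulting order indicates where planning and resources should go first.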

Developing Contingency Plans

Once potential risks are identified, a key step involves developing contingency plans. These plans outline the actions to be taken should a specific risk materialize. This proactive approach allows for a swift and organized response, minimizing the impact of the adverse event. Contingency plans should be flexible and adaptable, accommodating potential variations in the situation.

A well-structured contingency plan should cover a range of potential scenarios. It should include clear communication protocols, designated responsibilities, and a timeline for implementation. Contingency plans should also be reviewed and updated regularly so that they remain effective as circumstances change.
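The elements of a contingency plan named above (a scenario, communication protocols, designated responsibilities, a timeline, and regular review) map naturally onto a simple data structure. The sketch below is one hypothetical way to record a plan; the class names, fields, and 90-day review interval are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Action:
    description: str
    owner: str             # designated responsibility
    due_within_hours: int  # timeline, measured from plan activation


@dataclass
class ContingencyPlan:
    scenario: str
    trigger: str                # condition that activates the plan
    notify: list[str]           # communication protocol: who is told first
    actions: list[Action] = field(default_factory=list)
    last_reviewed: date = field(default_factory=date.today)
    review_interval_days: int = 90

    def ordered_actions(self):
        """Actions sorted by deadline, earliest first."""
        return sorted(self.actions, key=lambda action: action.due_within_hours)

    def is_review_due(self, today):
        """True once the review interval has elapsed since the last review."""
        return today - self.last_reviewed >= timedelta(days=self.review_interval_days)


if __name__ == "__main__":
    plan = ContingencyPlan(
        scenario="Fabricated video of an executive circulates online",
        trigger="Communications team confirms the video is not genuine",
        notify=["communications lead", "legal counsel"],
        actions=[
            Action("Publish a public statement", "communications lead", 24),
            Action("Request platform takedowns", "legal counsel", 4),
        ],
        last_reviewed=date(2024, 1, 1),
    )
    for action in plan.ordered_actions():
        print(f"within {action.due_within_hours}h: {action.description} ({action.owner})")
    print("review due:", plan.is_review_due(date(2024, 4, 1)))
```

Keeping the review date on the plan itself makes stale plans easy to find, which supports the regular review the text calls for. The `list[str]` annotations require Python 3.9 or later.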

Implementing Robust Controls

Implementing robust controls is a critical component of risk mitigation. These controls can range from simple preventative measures to more complex procedures. The goal is to minimize the likelihood of a risk occurring or to limit its potential impact if it does occur. Effective controls are tailored to the specific risks identified in the assessment process.
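The distinction between controls that reduce the likelihood of a risk and controls that limit its impact can be made concrete by estimating residual risk. The sketch below assumes each control carries a hypothetical fractional `reduction`: a preventive control scales down the likelihood rating, a mitigating control scales down the impact rating, and the residual score is their product. Treating reductions as independent multiplicative factors is a simplifying modeling assumption, and the names `Control` and `residual_score` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Control:
    name: str
    kind: str         # "preventive" lowers likelihood; "mitigating" lowers impact
    reduction: float  # assumed fractional reduction, from 0.0 to 1.0


def residual_score(likelihood, impact, controls):
    """Apply each control's assumed reduction and return likelihood * impact."""
    for control in controls:
        if not 0.0 <= control.reduction <= 1.0:
            raise ValueError(f"{control.name}: reduction must be between 0 and 1")
        if control.kind == "preventive":
            likelihood *= 1 - control.reduction
        elif control.kind == "mitigating":
            impact *= 1 - control.reduction
        else:
            raise ValueError(f"{control.name}: unknown control kind {control.kind!r}")
    return likelihood * impact


if __name__ == "__main__":
    controls = [
        Control("staff training", "preventive", 0.5),
        Control("incident response plan", "mitigating", 0.4),
    ]
    print(residual_score(4, 5, controls))
```

For a risk rated 4 for likelihood and 5 for impact (a score of 20), a preventive control with a 50% reduction and a mitigating control with a 40% reduction leave a residual score of about 6. Comparing the residual score with the original one is a rough way to check whether controls are tailored well enough to the risk.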

Monitoring and Evaluation

Monitoring and evaluating the effectiveness of implemented controls is essential for continuous improvement. Regular reviews reveal where the measures need strengthening, and this iterative process keeps the risk mitigation strategy relevant and effective over time. Consistent monitoring allows the plan to be adjusted as needed, preventing stagnation and maintaining a high level of security.

Communication and Collaboration

Effective communication and collaboration among all stakeholders are fundamental to successful risk mitigation. Clear and consistent communication ensures that everyone is aware of the risks, the mitigation strategies, and their respective roles in the process. This collaborative approach fosters shared understanding and accountability. Open channels of communication facilitate the rapid dissemination of information and enable quick responses to emerging threats.

Leveraging Expertise

Seeking and leveraging expertise from relevant professionals can significantly enhance risk mitigation efforts. Consulting with experts in the field can provide valuable insights and perspectives, helping to identify potential risks that might otherwise be overlooked. Experts can also offer guidance on implementing effective controls and developing appropriate contingency plans. This external input is invaluable in navigating complex situations.

Adapting to Change

The business environment is constantly evolving, and risk mitigation strategies must adapt accordingly. Staying informed about industry trends, emerging technologies, and potential regulatory changes is essential for maintaining a proactive approach to risk management. Adapting to change allows organizations to anticipate and address new threats before they significantly impact operations. This dynamic approach ensures that risk mitigation strategies remain effective in the face of evolving circumstances.